1. Introduction

1.1. Overview

Faim is a finite element solver optimized for solid mechanics simulations of bone, including strength and stiffness determinations, directly from three-dimensional medical image data such as micro-CT. Faim uses the mesh-free method, which is a highly memory efficient method of finite element analysis. This makes it possible to solve finite element models with very large numbers of degrees of freedom derived directly from high resolution scans.

The Faim Finite Element solver has an established record of being used in scientific studies. See the bibliography for selected studies.

1.2. How to read this manual

- If you need to install Faim, refer to Installation in this chapter.

- Experienced users may want to read What is new in version 9, and then go directly to the sections indicated there.

- New users should start by reading the section Work flow for finite element analysis. This will give you an overview of finite element analysis and the Numerics88 software tools.

- Next, we recommend that you jump to An introductory tutorial: compressing a solid cube. It will take you through the typical steps of generating a model, verifying it, solving it, and analyzing the results. This is a good introduction to how everything fits together, and will help to put things into context.

- The intervening chapters (preceding the Tutorials chapter) should be used as reference material. You probably don’t want to read them from beginning to end in one sitting with the intention of retaining everything; however, you should at least skim through them to be aware of the options, limitations and potential pitfalls.

- If you are familiar with n88modelgenerator and are interested in creating your own custom models, start with the tutorial Compressing a cube revisited using vtkbone, and then move on to the more advanced tutorials dealing with vtkbone.

1.3. What is new in version 9

Version 9 introduces the following new features:

- n88pistoia allows the user to set different critical strain and critical volume values when estimating failure load.

- Code segments have been updated for newer versions of VTK (8.2.0) and Python (3.7). For example, the print statements used in Python 3 differ from those in Python 2.

1.4. Installation

In version 9, the installation of Faim is more modular than in previous versions.

1.4.1. Install the solvers and n88modelgenerator

The solvers and n88modelgenerator are the non-open sourced components of Faim and require a license file to run. They are bundled together into installers that can be downloaded from https://numerics88.com/downloads/ .

The Linux installer will be named something like faim-9.0-linux.sh. It can be installed with the following command for a single user:

bash faim-9.0-linux.sh

For a system-wide installation, add sudo:

sudo bash faim-9.0-linux.sh

If you want to specify a particular install location, use the --prefix flag, for example

bash faim-9.0-linux.sh --prefix=$HOME/Numerics88

The Windows installer will be named something like faim-9.0-windows.exe. Simply run it to install.

The Mac installer is a disk image with a name like faim-9.0-macos.dmg. Double-click to mount it, and then drag the Faim icon to the Applications folder, or to any other convenient location.

For all operating systems, it is possible to install Faim system-wide using an administrator account or for a single user, for which administrator rights are not needed. It is also possible to install it multiple times on a single machine, so that each user has their own installation. The installation directory can also be freely renamed and moved around.

1.4.2. How to run the solvers

To run the solvers and n88modelgenerator, you may use any of the following methods:

- Run them with the complete path. For example

/path/to/faim/installation/n88modelgenerator

or, on Windows

"c:\Program Files\Faim 9.0\n88modelgenerator"

- Permanently add the directory where they are installed to your PATH variable. The exact method depends on your operating system.

- Run the script setenv in a Terminal (or Command Prompt on Windows) to set the PATH just for that Terminal. On Linux and macOS, this is done as follows

source /path/to/faim/installation/setenv

On Windows, if you are using a Command Prompt, then you can run

"c:\Program Files\Faim 9.0\setenv.bat"

while for Windows PowerShell, the equivalent is

. "c:\Program Files\Faim 9.0\setenv.ps1"

In version 9, the solvers and n88modelgenerator are statically linked. Setting LD_LIBRARY_PATH (Linux) or DYLD_LIBRARY_PATH (macOS) is no longer required.

1.4.3. Install a license file

To receive a license file, you must run one of the Faim programs with the --license_check option, and send the resulting UUID to skboyd@ucalgary.ca . For example,

> n88modelgenerator --license_check
n88modelgenerator Version 9.0
Copyright (c) 2010-2020, Numerics88 Solutions Ltd.
Host UUID is DFD0727B-89AA-4808-B03F-E73E80ABFE64

When you receive a license file, which is a short text file, you can copy it to one of several possible locations where the software can find it. These locations are:

- a subdirectory licenses of the installation directory, or

- the location specified by the environment variable NUMERICS88_LICENSE_DIR, if set, or

- for an individual user, a directory Numerics88/licenses (Numerics88\licenses on Windows) in their home directory, or

- on Linux, /etc/numerics88/licenses, or

- on macOS, /Library/Application Support/Numerics88/licenses, or $HOME/Library/Application Support/Numerics88/licenses, or

- on macOS, /Users/Shared/Numerics88/licenses.

You may have multiple license files installed at once.
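When a license file does not seem to be picked up, it can help to list the candidate directories and see which ones exist. The following is a minimal Python sketch of that check, built only from the locations listed above. The function name `candidate_license_dirs` and the order of the returned list are assumptions made for illustration; this manual does not state which location takes priority.

```python
import os
import sys
from pathlib import Path


def candidate_license_dirs(install_dir, platform=sys.platform, env=None, home=None):
    """Return the license directories listed in this section as Path objects.

    The order of the list is arbitrary (an assumption of this sketch).
    """
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)

    dirs = [Path(install_dir) / "licenses"]
    if env.get("NUMERICS88_LICENSE_DIR"):
        dirs.append(Path(env["NUMERICS88_LICENSE_DIR"]))
    dirs.append(home / "Numerics88" / "licenses")
    if platform.startswith("linux"):
        dirs.append(Path("/etc/numerics88/licenses"))
    elif platform == "darwin":
        dirs.append(Path("/Library/Application Support/Numerics88/licenses"))
        dirs.append(home / "Library" / "Application Support" / "Numerics88" / "licenses")
        dirs.append(Path("/Users/Shared/Numerics88/licenses"))
    return dirs


if __name__ == "__main__":
    # Replace the placeholder with your actual installation directory.
    for directory in candidate_license_dirs("/path/to/faim/installation"):
        status = "exists" if directory.is_dir() else "missing"
        print(f"{directory} ({status})")
```

The `platform`, `env` and `home` arguments exist only so that the function can be exercised without touching the real environment.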

1.4.4. Install n88tools and vtkbone

The solvers and n88modelgenerator are most conveniently used together with a collection of command-line utilities called n88tools. n88tools is implemented in Python, and depends on the library vtkbone. Additionally, vtkbone can be used to create custom model generation and processing scripts in Python, as will be discussed in a subsequent chapter.

n88tools and vtkbone are open-source. If you wish, you can download the source code from https://github.com/Numerics88/ and compile them yourself, using whatever installation of Python is most convenient for you.

Most users, however, will not want to go through the hassle of compiling them. The quickest way to install them is via Anaconda Python, which is supported on Linux, Windows, and macOS. An advantage of using Anaconda Python is that the very large repository of mathematical and scientific Python packages available in Anaconda Python can be used together with Faim.

To install n88tools and vtkbone in Anaconda Python, follow these steps:

- Install Anaconda Python from https://www.anaconda.com/distribution/ . It is also possible to install Miniconda (http://conda.pydata.org/miniconda.html). Miniconda is identical to Anaconda, except that instead of starting with a very large set of installed Python packages, it starts with a minimal set. You can of course add or remove packages to suit your needs. Anaconda Python can be installed either system-wide, or just for an individual user. Faim works with either arrangement.

- Create an Anaconda environment in which to run Faim. To learn about Anaconda environments, we recommend that you take the conda test drive at http://conda.pydata.org/docs/test-drive.html . Creating an Anaconda environment for Faim is optional; you could alternatively install n88tools and vtkbone directly in the root environment of Anaconda. However, using a dedicated environment greatly helps to avoid possible conflicts, where for example vtkbone may want a specific version of a dependency, while some other Python package that you want to use requires a different version of the same dependency. In addition, you can create separate environments for different versions of n88tools and vtkbone, and easily switch between them. To create an Anaconda environment named faim-9.0 and install n88tools version 9.0 and vtkbone all in one step, run the following command:

conda create --name faim-9.0 --channel numerics88 --channel conda-forge python=3.7 n88tools numpy scipy

To use n88tools, you simply activate the Anaconda environment. On Linux and macOS, this is done with

conda activate faim-9.0

On Windows, the command is

activate faim-9.0

You will have to activate the environment in each Terminal (or Command Prompt) in which you want to use Faim.

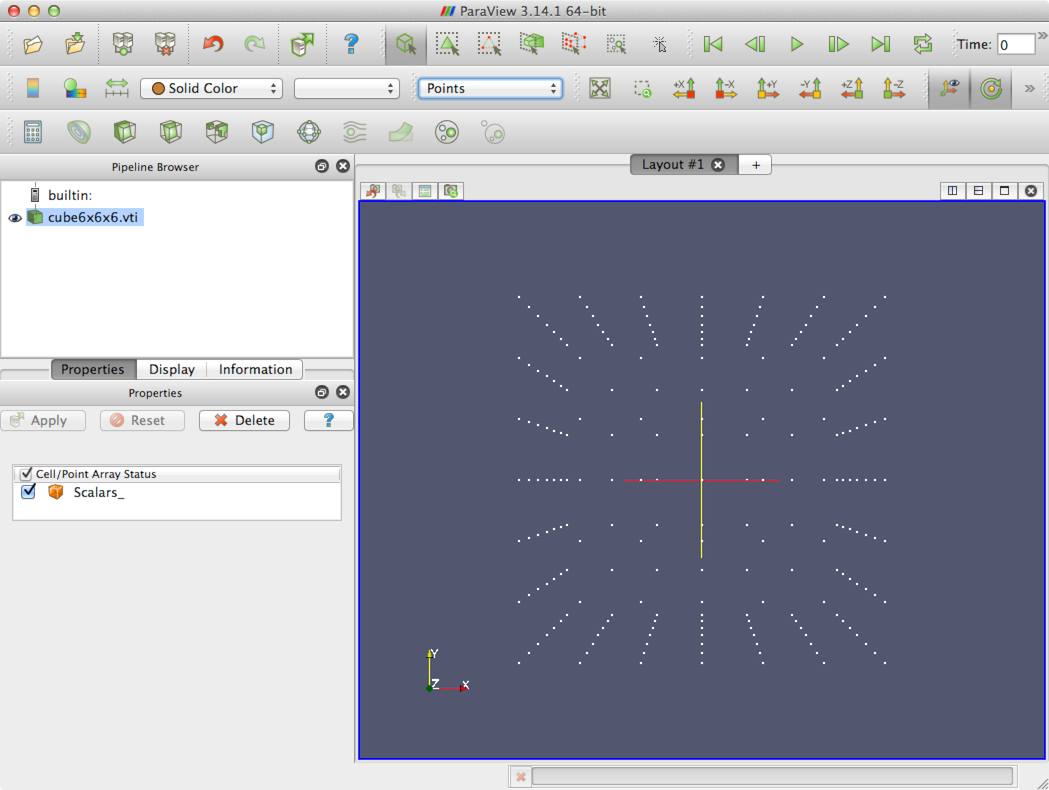

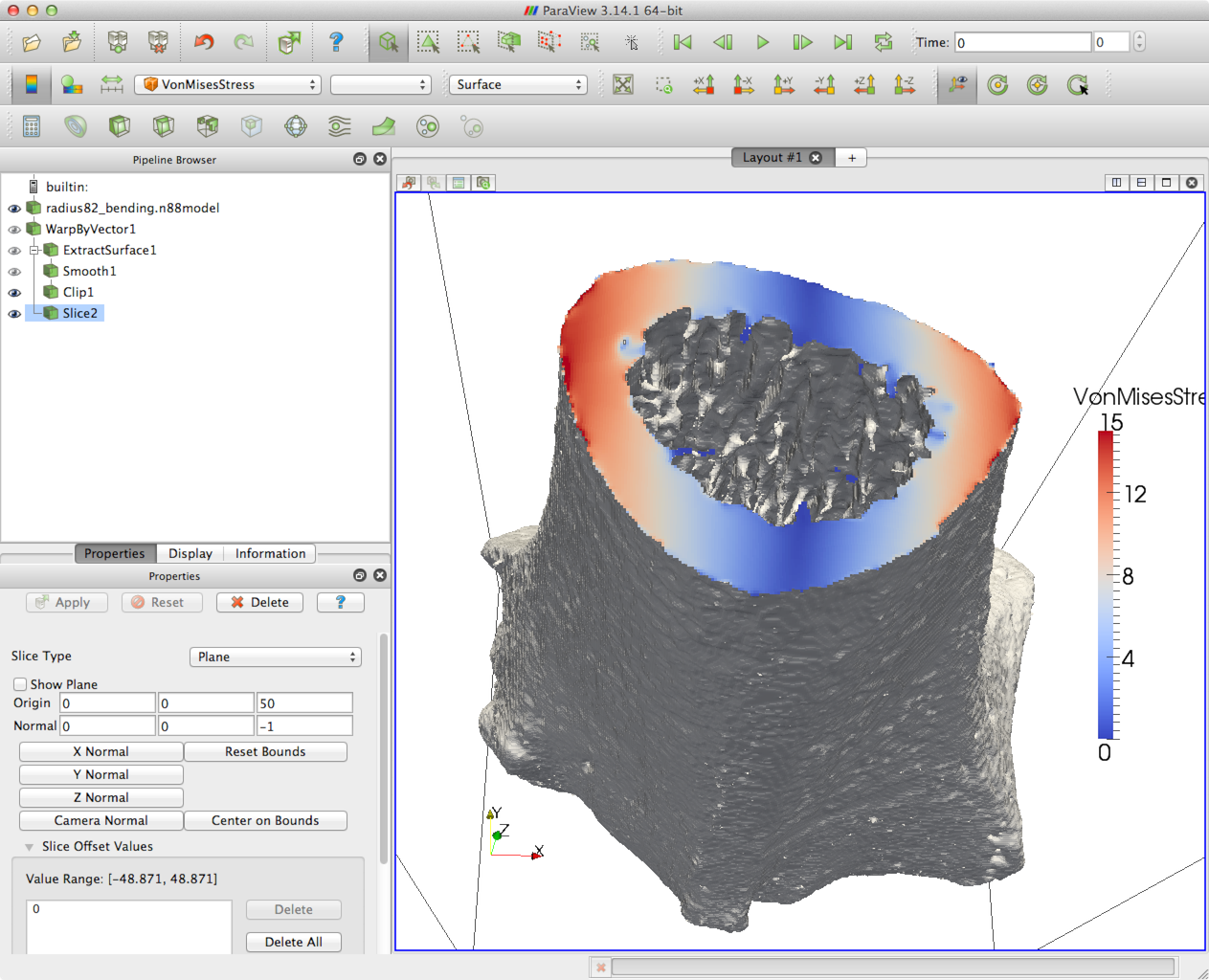

1.4.5. Install the Numerics88 plugins for ParaView

ParaView can be used for interactive rendering of Faim finite element models. For this purpose, Numerics88 provides plugins for ParaView. These allow ParaView to open n88model files (as well as Scanco AIM files). The plugins can be downloaded from http://numerics88.com/downloads/ . You can unzip the plugins into any convenient directory, and you can move and rename the plugin directory as you like.

The ParaView plugins are specific to a particular version of ParaView and cannot be loaded into a different version of ParaView.

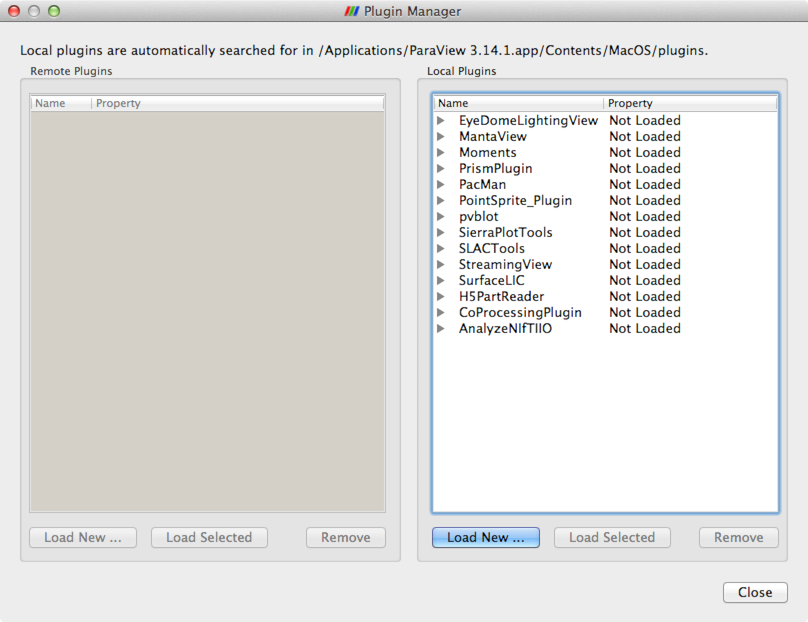

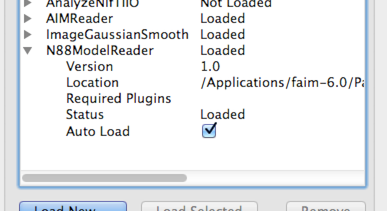

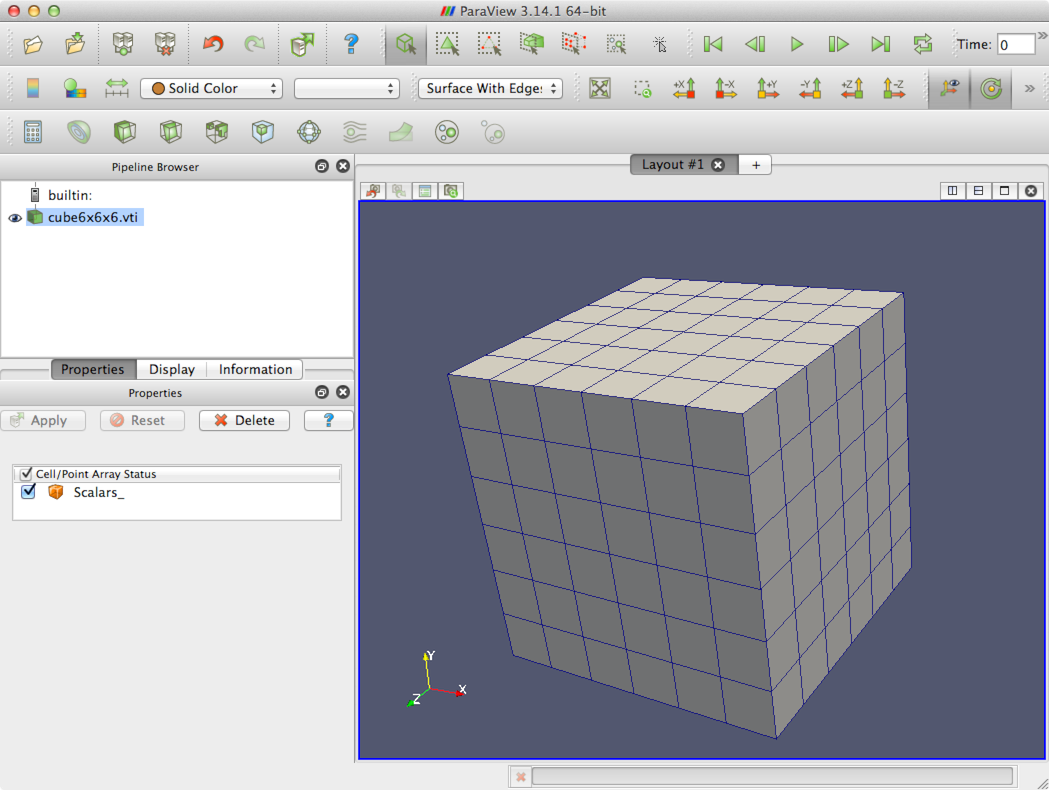

To load the plugins, open ParaView, and select the menu item Tools → Manage Plugins…. The Plugin Manager will appear, as shown in Figure 1.

Click Load New… and navigate to the location of the plugins. Select one of the plugins, and then click OK. You will need to repeat this for each of the plugins, in particular for

- libAIMReader,

- libImageGaussianSmooth, and

- libN88ModelReader.

They are now loaded, and you should be able to read the corresponding file formats. You may want to select Auto Load for each plugin so that you don’t need to load them every time you open ParaView. To do this, click the drop-down arrow beside the plugin in the Plugin Manager, and make sure that Auto Load is selected. This is shown in Figure 2.

1.4.6. Additional downloads

A PDF version of this manual can be downloaded from http://numerics88.com/documentation/ .

Example data files, together with scripts for the tutorials in this manual, can be downloaded from http://numerics88.com/downloads/ .

1.5. Work flow for finite element analysis

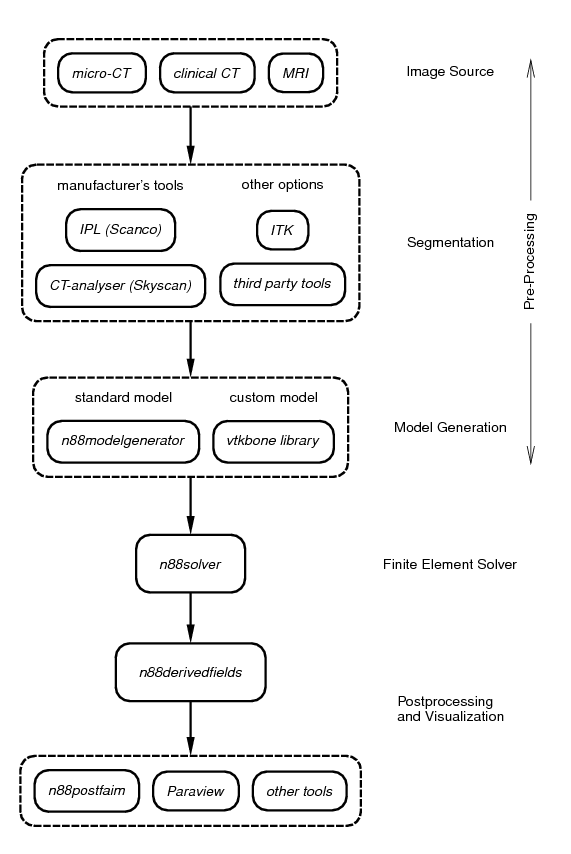

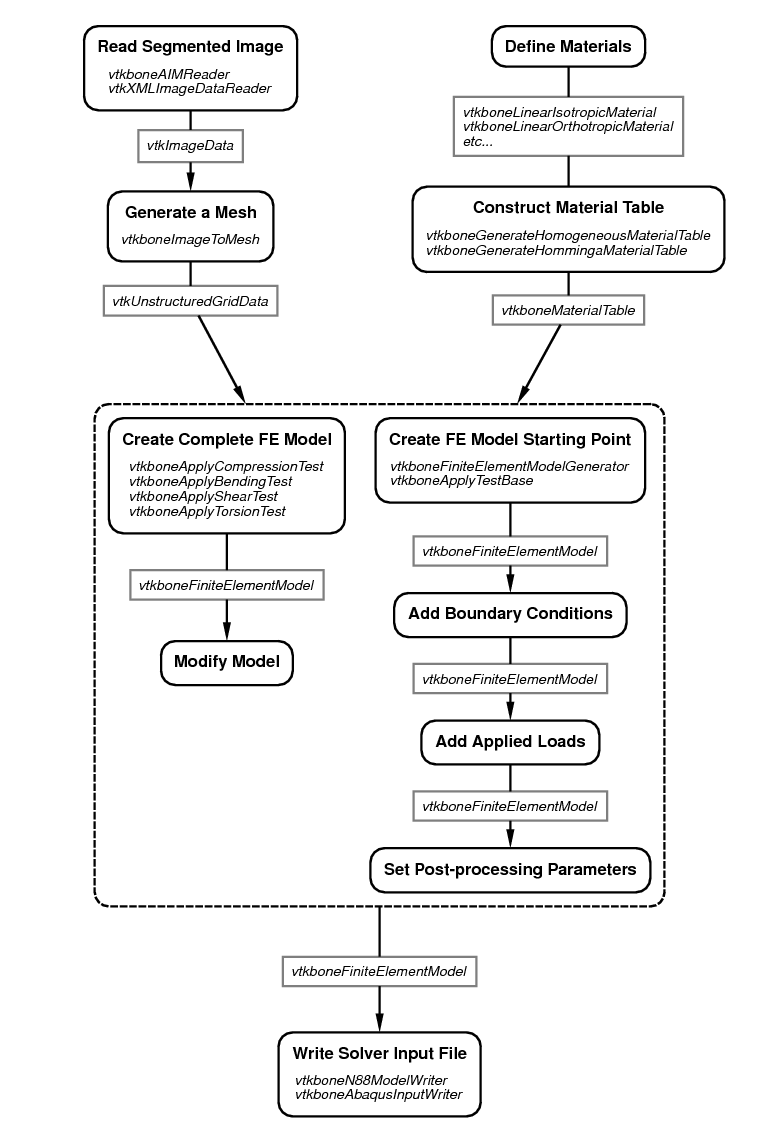

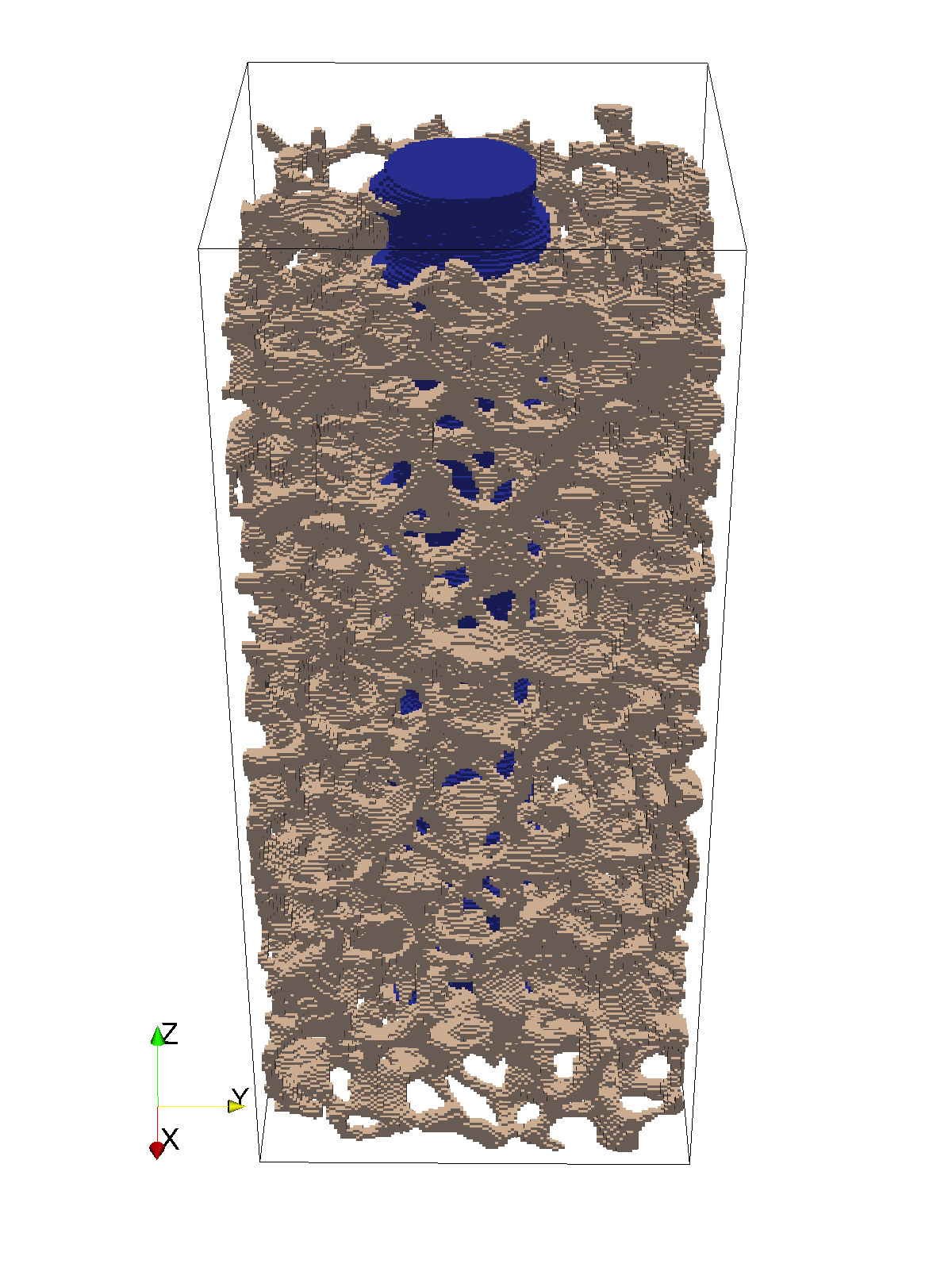

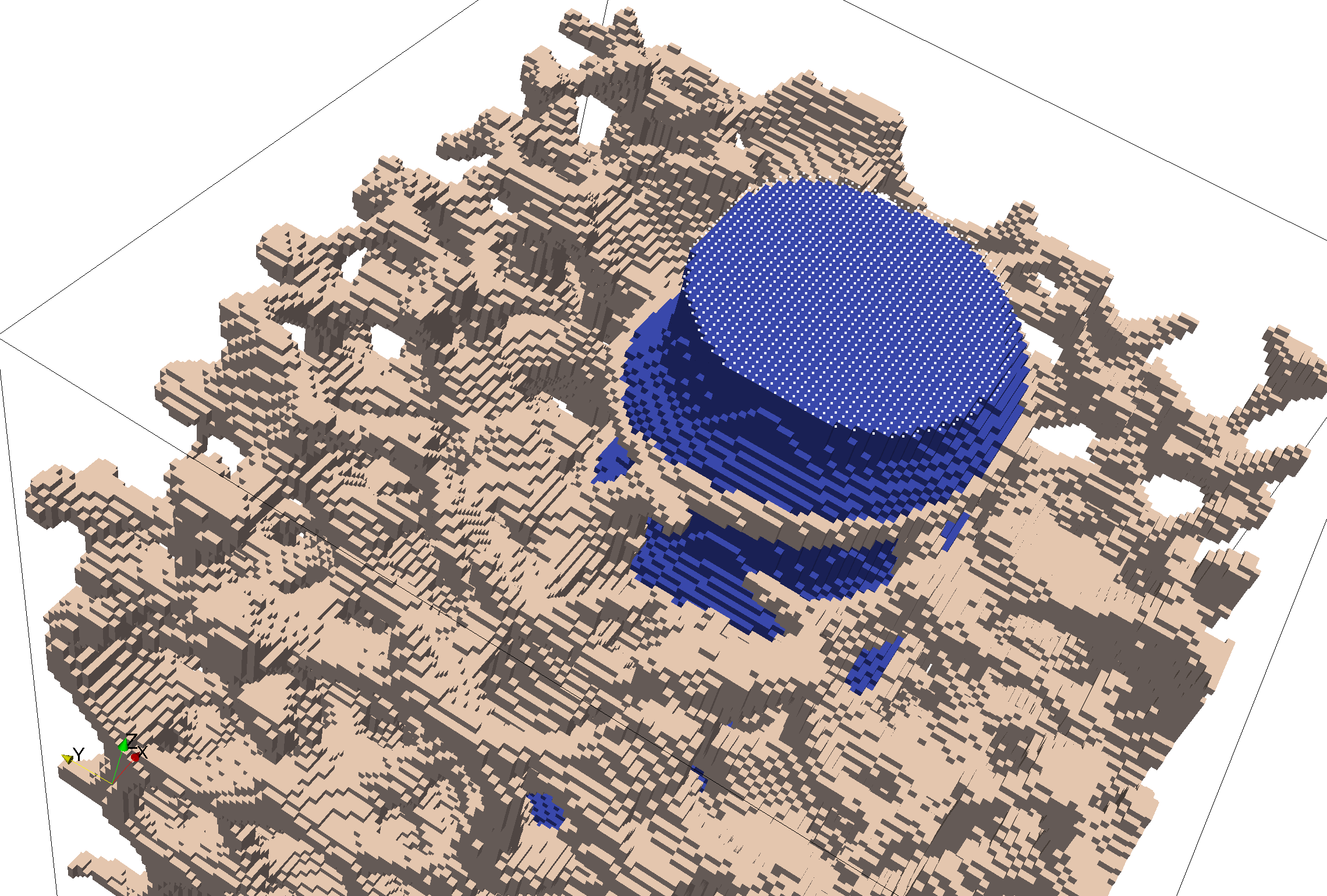

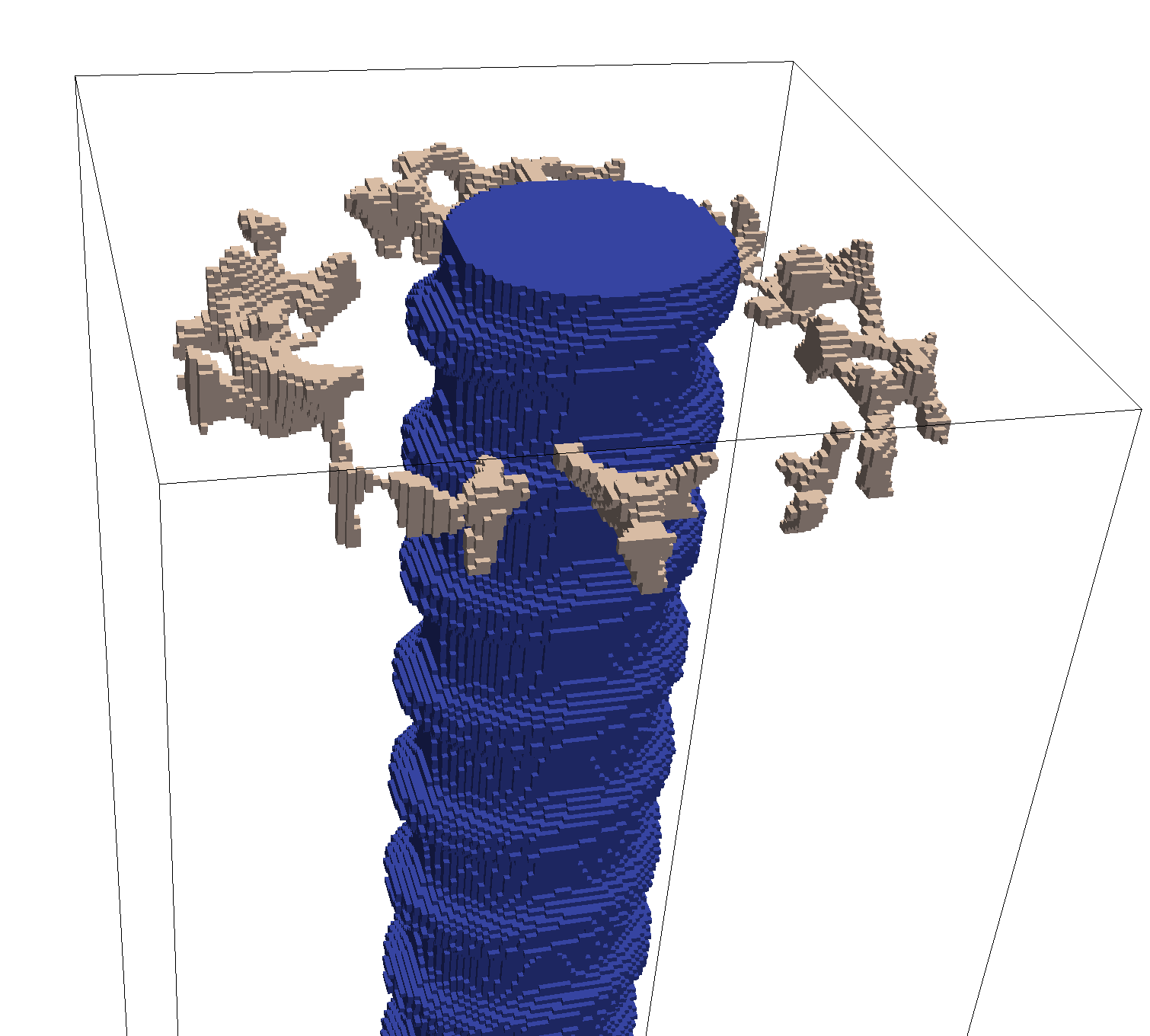

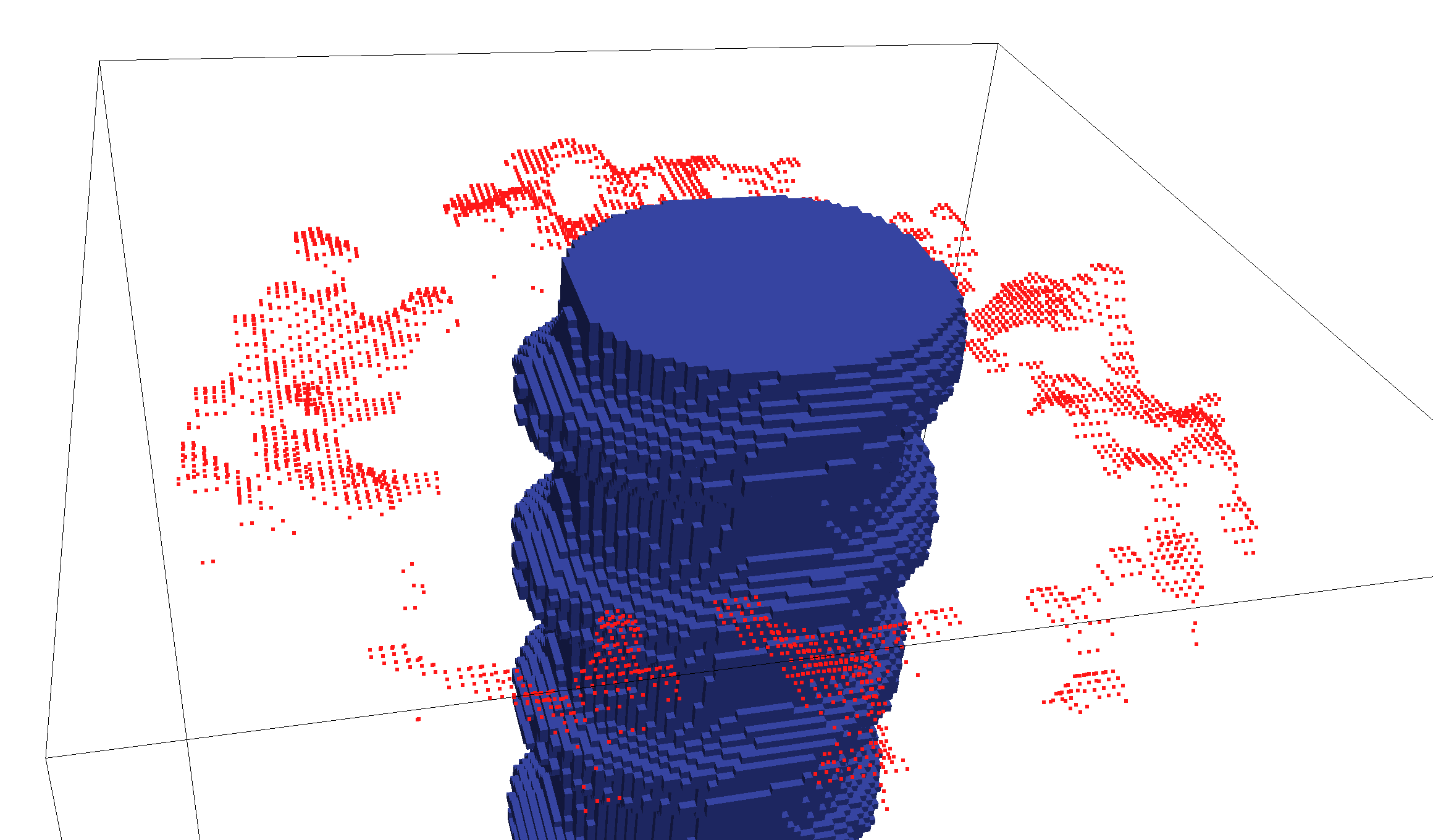

There are several steps involved in applying finite element analysis to 3D medical image data. These steps are diagrammed in Figure 3. Generally they can be categorized as pre-processing, solving, and post-processing. Faim integrates these steps to simplify the work flow as much as possible, while retaining flexibility and customizability in preparing, solving and analyzing finite element problems derived from micro-CT data.

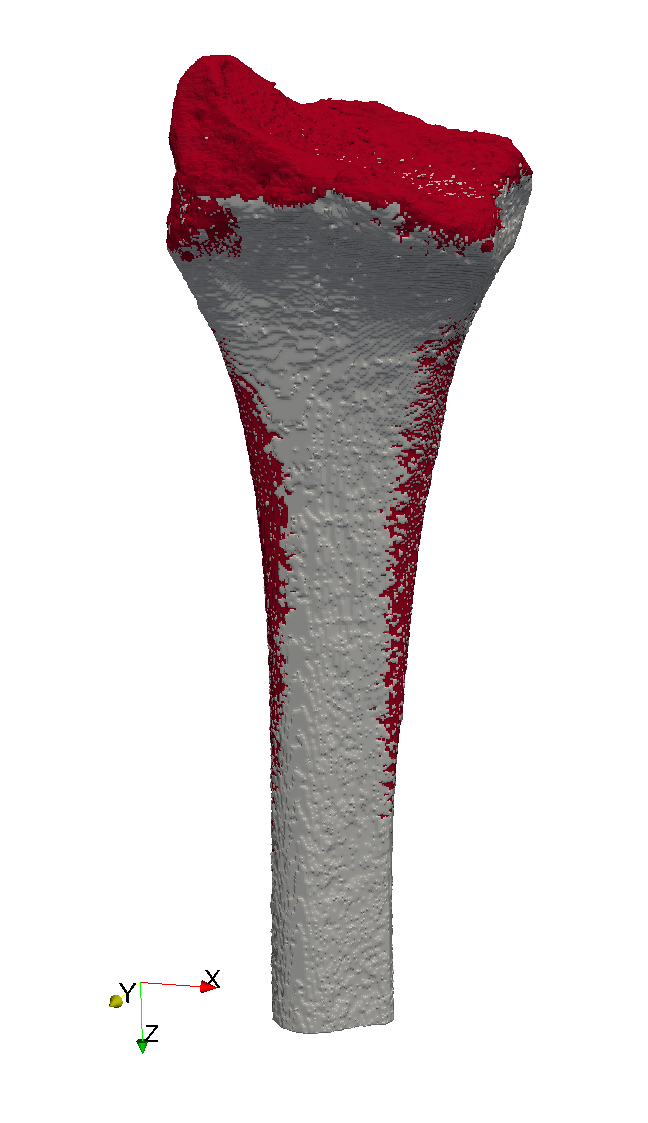

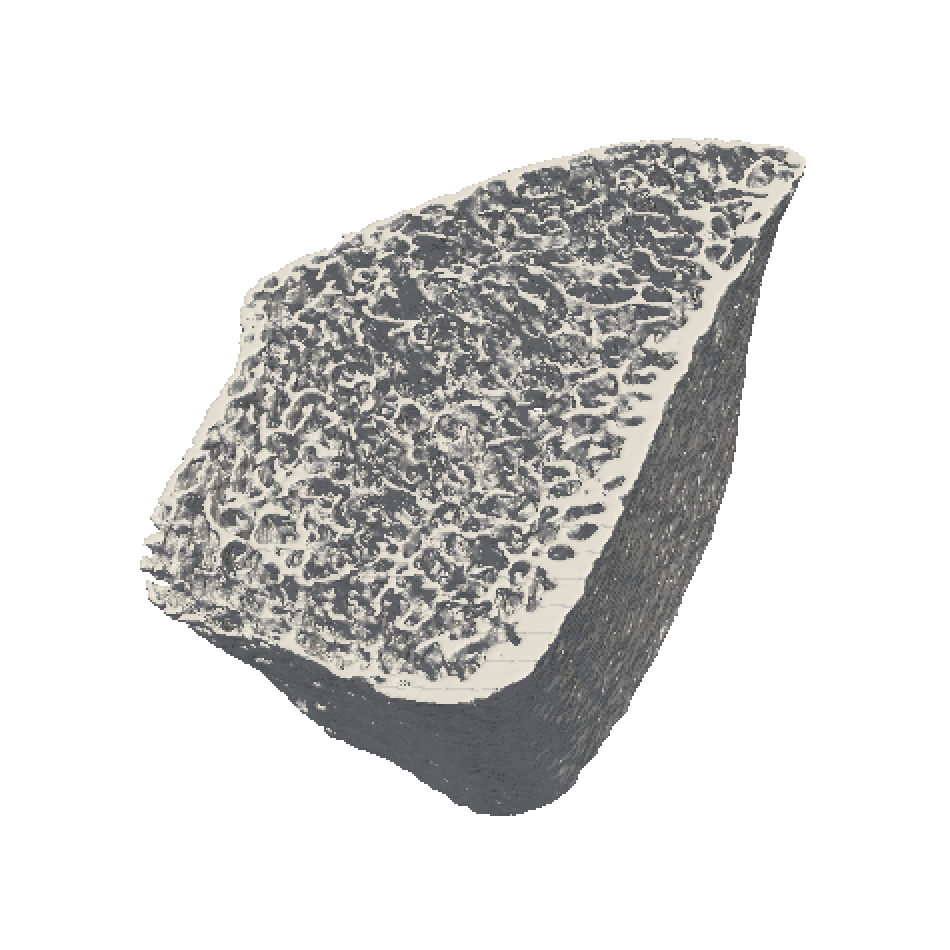

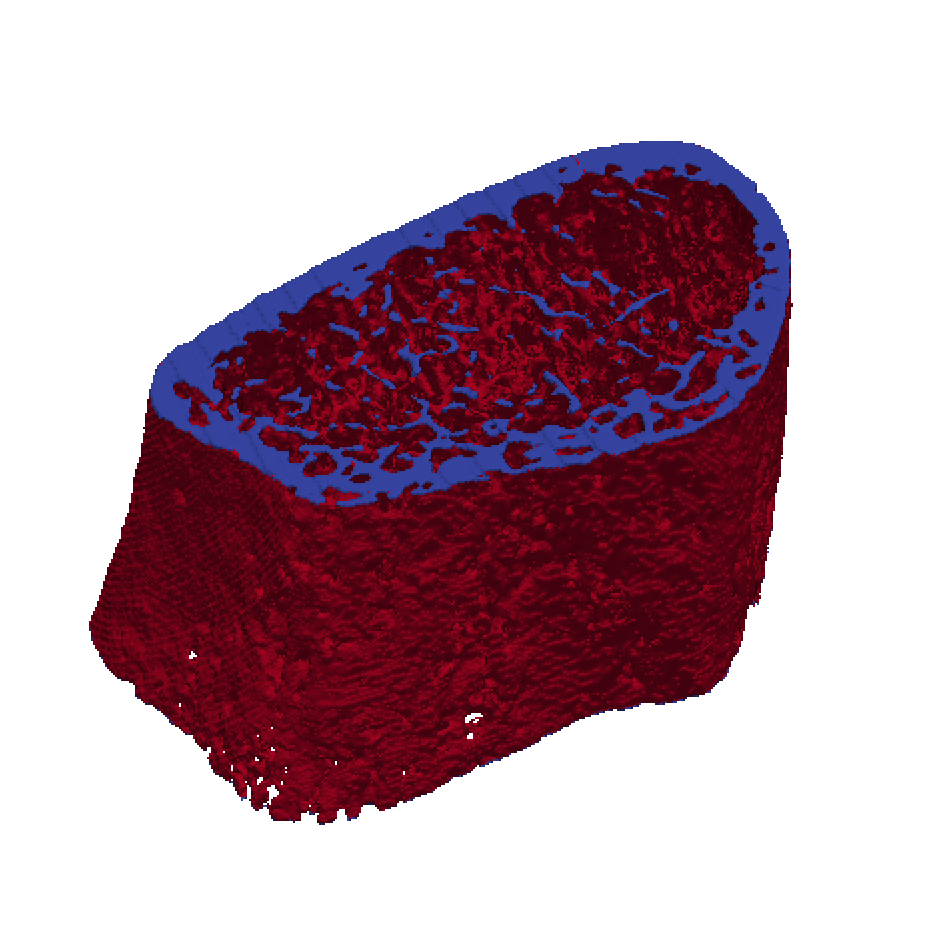

1.5.1. Segmentation

The first step is to segment your 3D medical image data. Although in principle any type of 3D image data can be analyzed, we focus on the use of micro-CT images of bone here. During segmentation, bone and other relevant structures in the CT image are labelled and identified. Segmentation is a very broad and complex field. Because of this, Numerics88 software does not attempt to provide all solutions. Instead, we recommend that you use the segmentation tool provided by the manufacturer of your medical image equipment. This has the advantage that it will be well-integrated into the scanning workflow. In the case of Scanco systems, the tool is Image Processing Language (IPL); for Skyscan systems, CT-analyzer provides segmentation functionality.

In cases where your segmentation problem is unusually tricky and beyond the abilities of the manufacturer’s tools, there are many third party solutions available.

Whichever tool you use to perform the segmentation, the result should be a 3D image file with integer values. These integer values are referred to as "Material IDs", because they will subsequently be associated with abstract mathematical material models. Material ID zero indicates background material (often air, but sometimes also other material, such as marrow in bone) that can be assumed to have negligible stiffness. Voxels with material ID zero will not be converted to elements in the FE model. Other values can be associated freely with particular materials or material properties as required.
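To make the expected form of a segmented image concrete, here is a minimal Python sketch using NumPy. It applies a single global threshold to a small synthetic array, which is only the simplest possible segmentation and is not a substitute for the manufacturer's tools recommended above. The function name, the threshold and the material ID 127 are illustrative assumptions.

```python
import numpy as np


def segment_by_threshold(image, threshold, material_id=1):
    """Label voxels at or above `threshold` with `material_id`; all others get 0."""
    labels = np.zeros(image.shape, dtype=np.int16)
    labels[image >= threshold] = material_id
    return labels


# Synthetic 4 x 4 x 4 "image" with values 0, 10, 20, ..., 630.
image = (10 * np.arange(64)).reshape(4, 4, 4)
labels = segment_by_threshold(image, threshold=320, material_id=127)

# Report how many voxels carry each material ID.
ids, counts = np.unique(labels, return_counts=True)
for material_id, count in zip(ids, counts):
    print(f"material ID {material_id}: {count} voxels")

# Only voxels with a non-zero material ID would become elements.
n_elements = int(np.count_nonzero(labels))
```

The result is an integer array in which zero marks background, which is the form of input described in this section.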

1.5.2. Model generation

Model generation is the process of converting a segmented image to a geometrical representation suitable for finite element analysis, complete with suitable constraints such as displacement boundary conditions and applied loads.

A number of steps are required for complete model generation.

- Meshing

Meshing is the process of creating a geometric representation of the model as a collection of finite elements. For micro-CT data, we typically convert each voxel in the input image to a hexahedral (box-shaped) element in the FE model. Each of the corners of the voxel becomes a node in the FE model. Voxels labelled as background (e.g. 0) are ignored.

There may be scenarios in which it is desirable to have FE models that have elements smaller or larger than the voxels of the available image. Larger elements lead to smaller FE models (i.e. fewer degrees of freedom) which may solve faster, at the expense of accuracy. Smaller elements allow the forces and displacements to vary more smoothly (that is, with higher resolution) in the FE model, at the cost of increased solution time and memory requirements. To alter the FE model from the original 3D image, one typically resamples the original image to the desired resolution employing some sort of interpolation.

- Material Assignment

Material assignment is the process of mapping material IDs to mathematical models for material properties. For example, an isotropic material is specified by its Young’s modulus (E) and Poisson’s ratio (ν).

- Assigning Boundary Conditions and Applied Loads

Boundary conditions and applied loads implement a specific mechanical test. The tool used will typically translate a desired physical action, such as a force or a displacement applied to a specific surface, into a set of constraints on a set of nodes or elements.
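The meshing step described above can be sketched in a few lines of NumPy: every non-zero voxel becomes one hexahedral element, and corner nodes shared between neighbouring voxels are stored once. This is an illustrative sketch only, not the code used by n88modelgenerator or vtkbone; the function name and the corner ordering are assumptions.

```python
import numpy as np


def voxels_to_hexahedra(labels):
    """Convert the non-zero voxels of a 3D label array into hexahedral elements.

    Returns (nodes, elements, material_ids):
      nodes        - (n_nodes, 3) integer grid indices of the unique corner nodes
      elements     - (n_elements, 8) indices into `nodes`
      material_ids - (n_elements,) material ID of each element
    """
    voxels = np.argwhere(labels != 0)
    # Offsets of the 8 corners of a voxel relative to its lowest-index corner.
    corners = np.array([[i, j, k] for k in (0, 1) for j in (0, 1) for i in (0, 1)])
    all_corners = (voxels[:, None, :] + corners[None, :, :]).reshape(-1, 3)
    # Corners shared by neighbouring voxels collapse to a single node.
    nodes, inverse = np.unique(all_corners, axis=0, return_inverse=True)
    elements = inverse.reshape(-1, 8)
    material_ids = labels[tuple(voxels.T)]
    return nodes, elements, material_ids


# Two adjacent voxels with different material IDs; everything else is background.
labels = np.zeros((3, 3, 3), dtype=int)
labels[0, 0, 0] = 1
labels[1, 0, 0] = 2
nodes, elements, material_ids = voxels_to_hexahedra(labels)
print(len(elements), "elements,", len(nodes), "nodes,", 3 * len(nodes), "degrees of freedom")
```

Each node carries three displacement degrees of freedom, which is why models derived from high-resolution scans become large so quickly.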

Faim provides two tools for model generation:

- n88modelgenerator

n88modelgenerator is a program that can generate a number of standard tests (e.g. axial compression, confined compression, etc.). n88modelgenerator has a number of options that allow for flexibility in tweaking these standard models. n88modelgenerator is the easiest method of generating models.

- vtkbone

vtkbone is a collection of custom VTK objects that you can use for creating models, and is a more advanced alternative to using n88modelgenerator. In order to use them, you need to write a program or script (typically in Python). Some learning curve is therefore involved, as well as the time to write, debug and test your programs. The advantage is nearly infinite customizability. VTK objects, including vtkbone objects, are designed to be easily chained together into execution pipelines. In this documentation, we will give many examples of using vtkbone in Python scripts.

1.5.3. Finite element solver

The finite element solver takes the input model and calculates a solution of the displacements and forces on all the nodes, subject to the specified constraints.

As finite element models derived from micro-CT can be very large, the memory efficiency and speed of the solver are important. Faim uses a mesh-free preconditioned conjugate gradient iterative solver. This type of solver is highly memory efficient.
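For readers unfamiliar with this class of solver, the following is a generic textbook sketch of a preconditioned conjugate gradient iteration in Python with NumPy. It is not Faim's implementation. The Jacobi (diagonal) preconditioner, the function names and the spring-chain test problem are all assumptions chosen for brevity. The operator is supplied as a function rather than as a stored matrix, which is one common way for iterative solvers to save memory.

```python
import numpy as np


def pcg(apply_A, b, diag_A, tol=1e-10, max_iter=1000):
    """Solve A x = b for symmetric positive-definite A with Jacobi-preconditioned CG.

    apply_A : function returning A @ v for a vector v (A itself is never stored)
    diag_A  : the diagonal of A, used as the preconditioner
    Returns the solution and the number of iterations performed.
    """
    x = np.zeros_like(b, dtype=float)
    r = b - apply_A(x)           # initial residual
    z = r / diag_A               # preconditioned residual
    p = z.copy()                 # first search direction
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for iteration in range(max_iter):
        if np.linalg.norm(r) <= tol * b_norm:
            return x, iteration
        Ap = apply_A(p)
        alpha = rz / (p @ Ap)    # step length along p
        x += alpha * p
        r -= alpha * Ap
        z = r / diag_A
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter


def apply_chain(v, k=1.0):
    """Stiffness operator of a chain of equal springs with both ends fixed."""
    out = 2.0 * k * v
    out[:-1] -= k * v[1:]
    out[1:] -= k * v[:-1]
    return out


n = 50
b = np.ones(n)                   # unit force on every node
x, iterations = pcg(apply_chain, b, diag_A=np.full(n, 2.0))
residual = np.linalg.norm(apply_chain(x) - b)
print(f"converged in {iterations} iterations, residual {residual:.2e}")
```

Only a handful of vectors of the size of the solution are held in memory at any time, which is the property that makes this family of methods attractive for very large models.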

Faim is currently limited to small-strain solutions of models with linear and elastoplastic material definitions. This is adequate for most bone biomechanics modelling purposes, as bone undergoes very little strain before failure. However, if you need to accurately model large deformations, the Faim solver is not an appropriate tool.

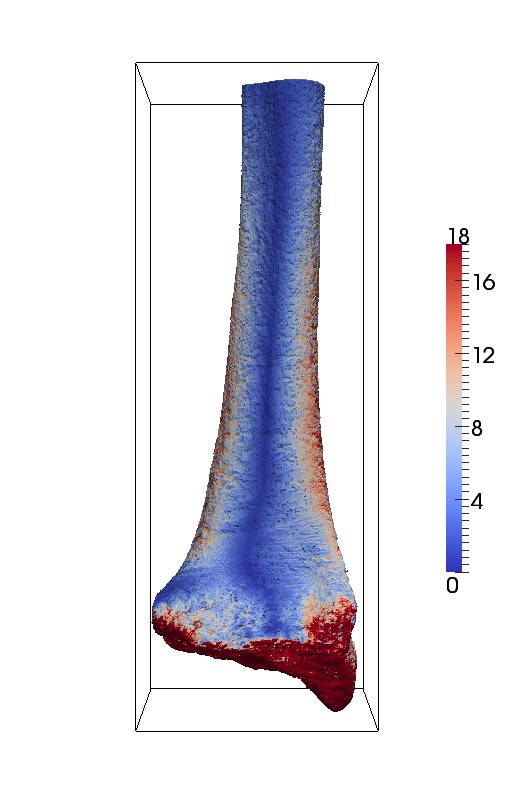

1.5.4. Post-processing and visualization

Post-processing and visualization can take many different forms, depending on the goals of your analysis. For example, you may want to identify locations of stress variations, in which case, a visualization tool is likely the best approach. Or, you may want to obtain a basic output such as an overall stiffness. A number of common post-processing operations are performed by Faim.

Visualization is important even in cases where ultimately you want one or more quantitative values. Visualization provides a conceptual overview of the solution, and may allow one to quickly identify incorrect or invalid results due to some deficiency in the input model. Many visualization software packages exist. If you don’t have an existing favourite package, we recommend the use of ParaView, which is an open-source solution from Kitware. The Faim distribution includes plug-ins for ParaView which allow ParaView to directly open Faim file types.

1.6. Units in Faim

Faim is intrinsically unitless; you may use any system of units that is self-consistent.

Nearly all micro-CT systems use length units of millimetres (mm). With forces in newtons (N), the consistent units for pressure are then megapascals (MPa), as 1 N/(1 mm)² = 1 MPa. Young’s modulus is an example of a quantity that has units of pressure. In the examples, we will consistently use these units (mm, N, MPa).
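As a quick arithmetic check of this unit system, the short Python sketch below computes a stress and an apparent modulus from quantities in mm and N. The function names and the numerical values are hypothetical and serve only to show that no conversion factors are needed.

```python
def stress_mpa(force_n, area_mm2):
    """Stress in MPa from a force in N and an area in mm^2 (1 N/mm^2 = 1 MPa)."""
    return force_n / area_mm2


def apparent_modulus_mpa(force_n, area_mm2, displacement_mm, height_mm):
    """Apparent modulus in MPa: stress divided by the (unitless) strain."""
    strain = displacement_mm / height_mm
    return stress_mpa(force_n, area_mm2) / strain


# 50 N acting on a 2 mm x 2 mm face.
stress = stress_mpa(50.0, 2.0 * 2.0)
# The same load compressing a 1 mm tall sample by 0.01 mm.
modulus = apparent_modulus_mpa(50.0, 4.0, 0.01, 1.0)
print(f"stress = {stress} MPa, apparent modulus = {modulus:.1f} MPa")

# Cross-check in SI base units: 1 N over (1e-3 m)^2 is 1e6 Pa.
pascals = 1.0 / (1e-3) ** 2
```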

2. Preparing Finite Element Models With n88modelgenerator

2.1. Running n88modelgenerator

n88modelgenerator is a tool to create a number of different standard mechanical test simulations directly from segmented 3D images. This tool is designed to be simple to use and in many cases, requires no more than specifying a test type and an input file, like this

n88modelgenerator --test=axial mydata.aim

This will generate a file mydata.n88model that is suitable as input to the Faim solver.

For an introduction to n88modelgenerator, we recommend that you start first with An introductory tutorial: compressing a solid cube. Getting your hands dirty, as it were, with the program is often the best way to learn. Once you have some familiarity with the program, the following documentation will make much more sense.

The models produced by n88modelgenerator can be modified and tuned by specifying a number of optional parameters. For example, here n88modelgenerator is run to create an axial test with a specified compressive strain and Young’s modulus:

n88modelgenerator --test=axial --normal_strain=-0.01 --youngs_modulus=6829 test25a.aim

Parameters can be specified either on the command line, or in a configuration file. Command line arguments must be preceded with a double dash (--). The argument value can be separated from the argument name with either an equals sign (=) or a space. A complete list of all possible arguments is given in the Command Reference chapter. You can also run n88modelgenerator with the --help option to get a complete list of possible parameters. All parameters except the input file name are optional; a default value will be used for unspecified parameters.

To use a configuration file, run n88modelgenerator as follows.

n88modelgenerator --config=mytest.conf test25a.aim

Here we are specifying the name of the input file on the command line. This is convenient if we want to use the same configuration file for multiple input files. It is also possible to specify the name of the input file in the configuration file, using the option input_file. The output file name is left unspecified, so a default value will be used (test25a_axial.n88model in this case), but it can also be specified either on the command line or in the configuration file if desired.

The configuration file format is one line per option: the parameter name (without leading dashes), followed by the equals character (=), followed by the parameter value. Lines beginning with # are ignored.

The following configuration file is exactly equivalent to the example above using command line parameters.

# This is the example configuration file "mytest.conf"
test = axial
normal_strain = -0.01
youngs_modulus = 6829

If a parameter is specified on both the command line and in a configuration file, the command line value takes precedence.
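The configuration file rules described above (one option per line, name = value, lines beginning with # ignored, command line wins) are simple enough to sketch in Python. The sketch below is not the parser used by n88modelgenerator; the function names are hypothetical and all values are kept as strings.

```python
def parse_config(text):
    """Parse 'name = value' lines; blank lines and lines beginning with # are ignored."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, separator, value = line.partition("=")
        if not separator:
            raise ValueError(f"expected 'name = value', got: {line!r}")
        options[name.strip()] = value.strip()
    return options


def merge_options(config_options, command_line_options):
    """Combine both sources; command line values take precedence."""
    merged = dict(config_options)
    merged.update(command_line_options)
    return merged


example = """\
# This is the example configuration file "mytest.conf"
test = axial
normal_strain = -0.01
youngs_modulus = 6829
"""

config = parse_config(example)
# Equivalent of also passing --normal_strain=-0.02 on the command line.
effective = merge_options(config, {"normal_strain": "-0.02"})
print(effective)
```

A script of this kind can be handy for checking which values a batch of runs will actually use before launching them.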

n88modelgenerator can accept several different input file formats. Refer to the Command Reference chapter for details.

If you have an input image in a different format, there are many possible solutions. One is to convert the file format, possibly with ParaView, which can read a large number of formats. VTK can also be used to convert many image files. For example, the following Python script will convert a MetaImage format file to a VTK XML image data (.vti) file:

import vtk

# Read the MetaImage file
reader = vtk.vtkMetaImageReader()
reader.SetFileName("test.mhd")

# Write the image as VTK XML image data
writer = vtk.vtkXMLImageDataWriter()
writer.SetFileName("test.vti")
writer.SetInputConnection(reader.GetOutputPort())
writer.Update()

For more information on scripting, refer to Preparing Finite Element Models With vtkbone.

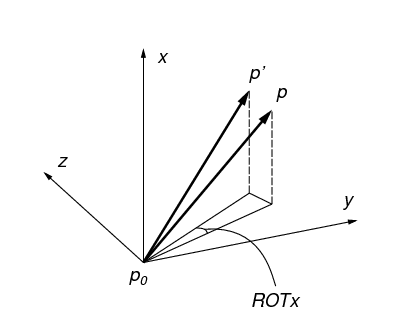

2.2. Test orientation

To allow for flexibility in defining the orientation of applied tests, two coordinate frames are defined:

- Data Frame

The Data Frame is the coordinate frame of the data. The input and output data use the same coordinate system.

- Test Frame

The Test Frame is the coordinate frame in which the test boundary conditions are applied. Tests are defined with a constant orientation in the Test Frame.

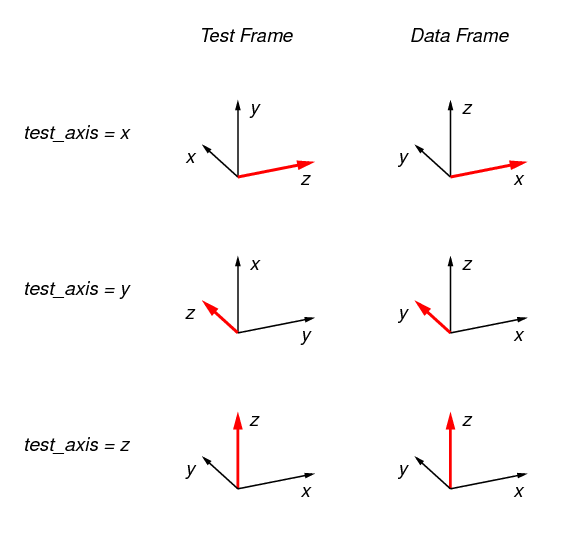

The relationship between the Test Frame and the Data Frame is set by the value

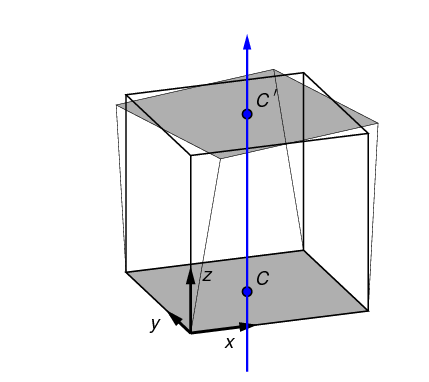

of the parameter test_axis according to the following

figure. In the figure, the red-colored axis is the test axis. What this

implies exactly depends on the kind of test that is being applied. For

example, for a compression test, the direction of compression is along the test

axis. The test axis is always the z axis in the Test Frame, and always equal

to value of the parameter test_axis in the Data Frame. The

default value for test_axis is z ; for this case the Test Frame and the

Data Frame coincide, as shown.

In the test descriptions, references to “Top” refer to the maximum z surface in the Test Frame; references to “Bottom” refer to the minimum z surface in the Test Frame. Likewise “Sides” refers to the surfaces normal to the x and y axes in the Test Frame.

The Test Frame is used only for the specification of tests (i.e. a specific configuration of boundary conditions and applied loads) within n88modelgenerator and vtkbone. When the model is written to disk as an n88model file, it is encoded with reference to the original Data Frame. As a consequence, post-processing and visualization are in the original Data Frame.

In terms of rotations, for a setting of test_axis = x, the transformation from the Test Frame to the Data Frame is a rotation of -90º about the x axis, followed by a -90º rotation about the z' axis. For a setting of test_axis = y, the transformation from the Test Frame to the Data Frame is a rotation of 90º about the y axis, followed by a 90º rotation about the z' axis. These transformation sequences are of course not unique. For most applications, it is not necessary to know these transformations; it should be sufficient to refer to Figure 4.
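For scripting purposes, the relationship between the two frames can be expressed as a relabelling of axes. The Python sketch below assumes that the mapping is a cyclic permutation that places the Test Frame z axis on the chosen test axis, which is our reading of the rotations described above. Figure 4 remains the authoritative reference, and the names `TEST_TO_DATA_AXES` and `to_data_frame` are hypothetical.

```python
import numpy as np

# For each test_axis: the Data Frame axis index (0=x, 1=y, 2=z) onto which
# the Test Frame x, y and z axes map. Assumed to be cyclic permutations.
TEST_TO_DATA_AXES = {
    "x": (1, 2, 0),  # test x -> data y, test y -> data z, test z -> data x
    "y": (2, 0, 1),  # test x -> data z, test y -> data x, test z -> data y
    "z": (0, 1, 2),  # the two frames coincide
}


def to_data_frame(vector, test_axis="z"):
    """Express a vector given in Test Frame components in Data Frame components."""
    vector = np.asarray(vector, dtype=float)
    data = np.empty(3)
    data[list(TEST_TO_DATA_AXES[test_axis])] = vector
    return data


# The direction of compression (Test Frame z) for each setting of test_axis.
for axis in ("x", "y", "z"):
    print(axis, to_data_frame([0.0, 0.0, 1.0], test_axis=axis))
```

Because the permutations are cyclic, a right-handed Test Frame always maps to a right-handed Data Frame.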

2.3. Standard tests

Axis directions in the following test descriptions are in the Test Frame. All the standard tests can be applied along any axis of the data by specifying test_axis, as described in the previous section.

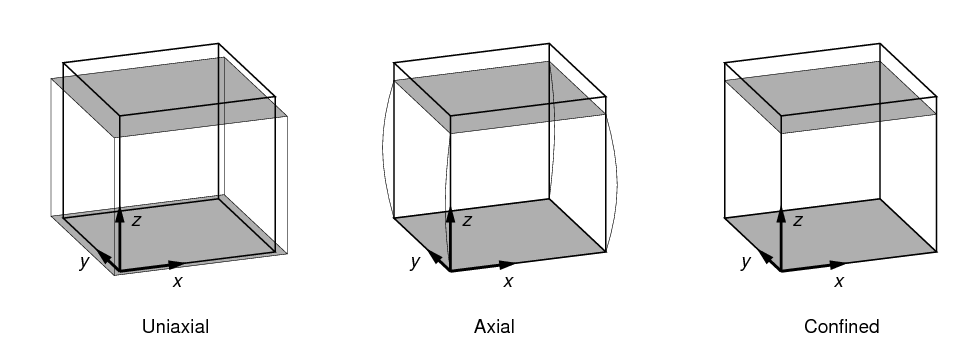

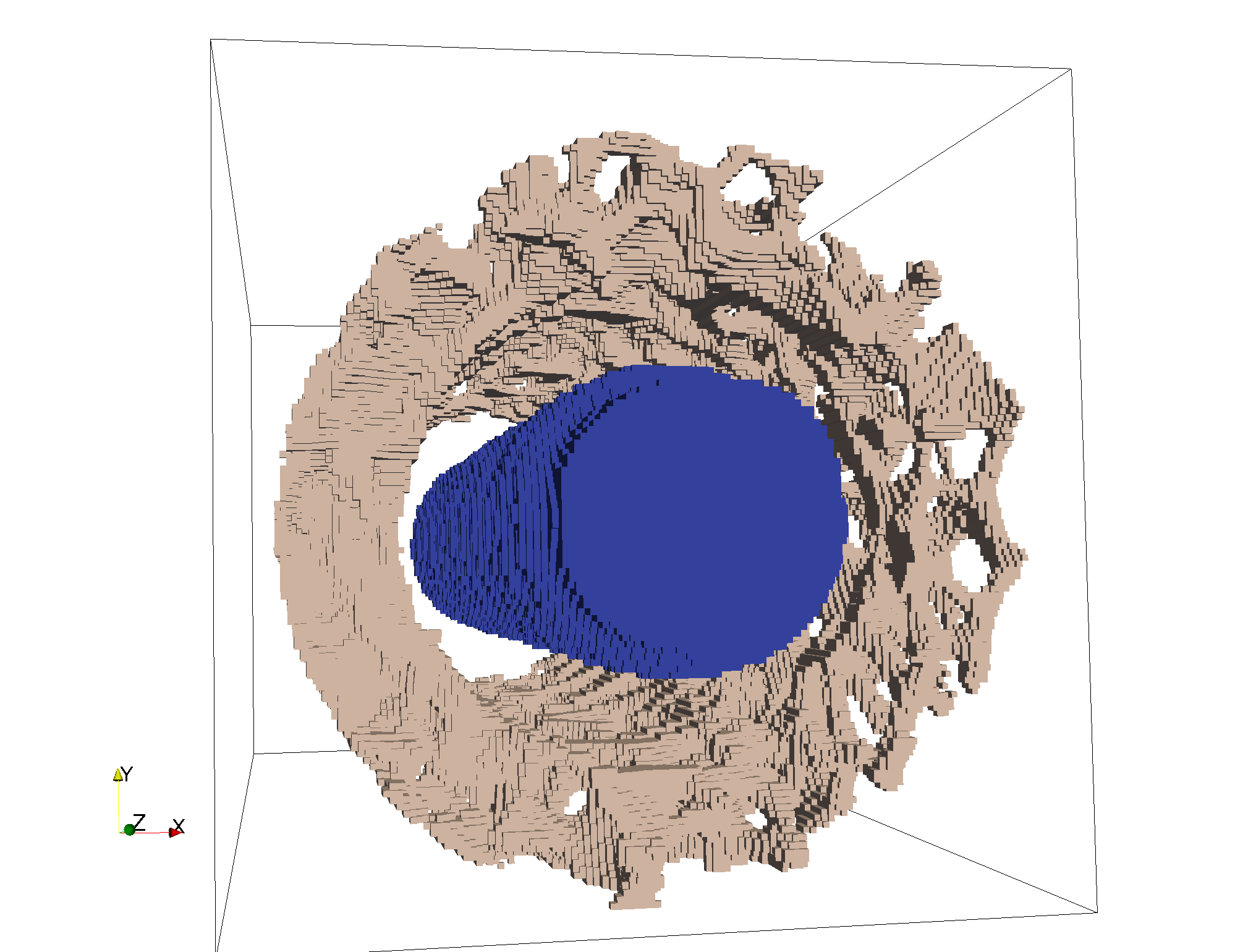

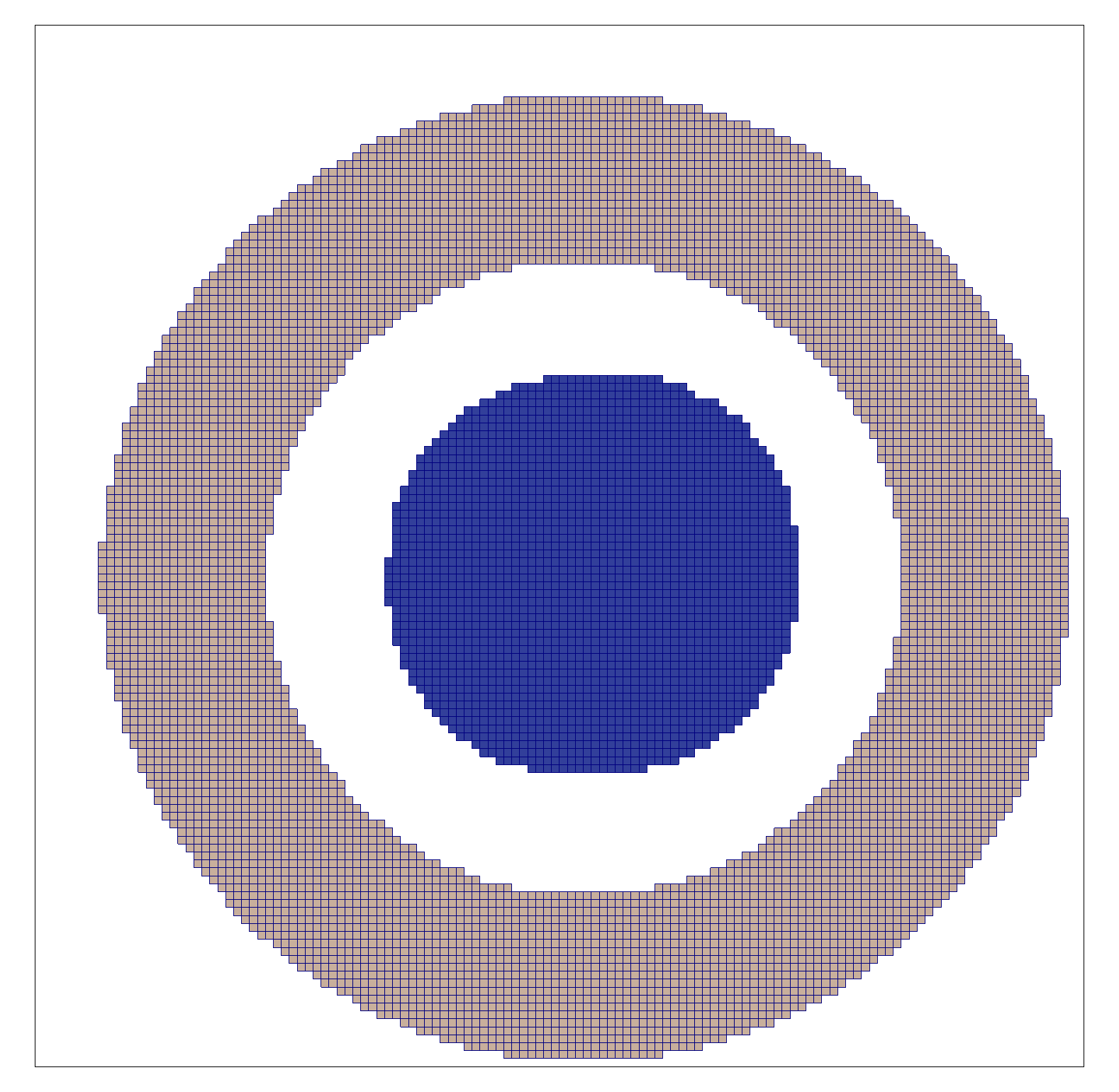

2.3.1. Uniaxial test

A uniaxial test is a compression (or tension) test with a fixed displacement or force applied along the z axis. Two boundary conditions are applied:

- Nodes on the bottom surface are fixed in the z direction, but free in the x and y directions.

- Nodes on the top surface are subject to a fixed displacement in the z direction. No constraints are applied to the x and y directions.

A uniaxial test is shown in Figure 5, along with other types of compression tests. The coordinate system shown in the figure is the Test Frame. A uniaxial test is distinguished from an axial test (described below) by the fact that nodes on the top and bottom surfaces are unconstrained laterally. This corresponds to zero contact friction.

The amount of displacement applied to the top surface can be specified by either the normal_strain or displacement parameters.

Uniaxial models are under-constrained since, as defined, arbitrary lateral motion and arbitrary rotation about the z axis of the entire model are permitted. This results in a singular system of equations. A "pin" may be added to prevent these motions; see the pin parameter. However, Faim will find a (non-unique) solution even without a pin, and in fact typically does so faster in the absence of a pin. A pin should always be added if you want to use an algebraic solver, or if you intend to compare the absolute positions of multiple solved models. Alternatively, you can register the solved models before comparing absolute positions.

2.3.2. Axial test

An axial test is similar to a uniaxial test, with the additional constraint that nodes on the top and bottom surfaces are also laterally fixed (i.e. no movement of these nodes is permitted in the x-y directions). This corresponds to 100% contact friction.

An axial test is shown in Figure 5.

2.3.3. Confined test

A confined test is similar to an axial test, with the addition that nodes on the side surfaces of the image volume are constrained laterally (i.e. no movement of these nodes is permitted in the x-y directions).

A confined test is shown in Figure 5.
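The differences between the uniaxial, axial and confined tests can be summarized as sets of displacement constraints on nodes. The Python sketch below builds these sets for a small cube of nodes, following the descriptions above. It is illustrative only: the function name and the dictionary representation are assumptions, and the side surfaces are taken to be the x and y bounds of the node set.

```python
import numpy as np


def compression_constraints(nodes, test="uniaxial", displacement=-0.01):
    """Return {(node_index, axis): value} displacement constraints in the Test Frame.

    nodes : (n, 3) array of node coordinates; axis is 0, 1 or 2 for x, y or z.
    """
    if test not in ("uniaxial", "axial", "confined"):
        raise ValueError(f"unknown test: {test}")
    nodes = np.asarray(nodes, dtype=float)
    x, y, z = nodes.T
    bottom = np.flatnonzero(z == z.min())
    top = np.flatnonzero(z == z.max())

    constraints = {}
    for i in bottom:
        constraints[(int(i), 2)] = 0.0            # bottom surface fixed in z
    for i in top:
        constraints[(int(i), 2)] = displacement   # top surface displaced in z

    laterally_fixed = []
    if test in ("axial", "confined"):
        # Top and bottom nodes may not move in x or y.
        laterally_fixed += list(bottom) + list(top)
    if test == "confined":
        # Nodes on the side surfaces may not move in x or y either.
        on_side = (x == x.min()) | (x == x.max()) | (y == y.min()) | (y == y.max())
        laterally_fixed += list(np.flatnonzero(on_side))
    for i in laterally_fixed:
        constraints[(int(i), 0)] = 0.0
        constraints[(int(i), 1)] = 0.0
    return constraints


# Nodes of a cube made of 2 x 2 x 2 elements: a 3 x 3 x 3 grid of nodes.
grid = np.array([[i, j, k] for k in range(3) for j in range(3) for i in range(3)], dtype=float)
for test in ("uniaxial", "axial", "confined"):
    print(test, len(compression_constraints(grid, test)), "constrained degrees of freedom")
```

Counting the constrained degrees of freedom in this way is a simple sanity check that a model has the boundary conditions you intended.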

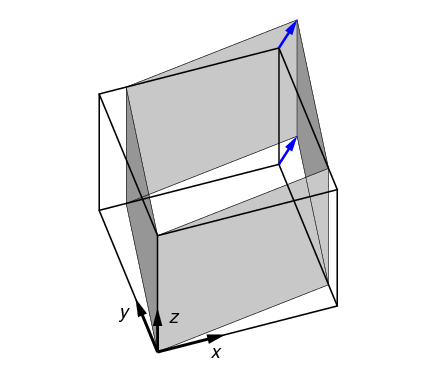

2.3.4. Symmetric shear test (symshear)

In a symmetric shear test, the side surfaces are angularly displaced to correspond to a given shear strain. This is shown in Figure 6. The only parameter of relevance to a symmetric shear test is shear_strain.

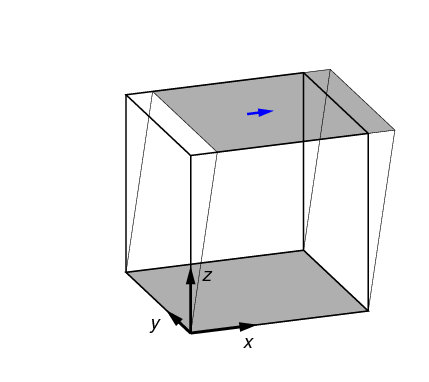

2.3.5. Directional shear test (dshear)

In a directional shear test, the top surface is displaced laterally, as shown in Figure 7. The direction and degree of displacement are set by the parameter shear_vector, which gives the x,y shear displacement in the Test Frame. Typically shear_vector is unitless, and the actual displacement is the shear vector scaled by the vertical height of the model (i.e. the image extent in the z direction in the Test Frame). If however scale_shear_to_height is set to off, then shear_vector is taken to have absolute units of length.
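The effect of scale_shear_to_height on the applied displacement can be written as a one-line rule. The following Python sketch mirrors the description above; the function name and the example numbers are hypothetical.

```python
def shear_displacement(shear_vector, height, scale_shear_to_height=True):
    """Return the (x, y) displacement of the top surface in the Test Frame.

    With scaling on, shear_vector is unitless and is multiplied by the model
    height; with scaling off, it is already a displacement in length units.
    """
    sx, sy = shear_vector
    if scale_shear_to_height:
        return (sx * height, sy * height)
    return (sx, sy)


# A model 5 mm tall with shear_vector = (0.01, 0).
scaled = shear_displacement((0.01, 0.0), height=5.0)
absolute = shear_displacement((0.01, 0.0), height=5.0, scale_shear_to_height=False)
print("scaled:", scaled, "absolute:", absolute)
```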

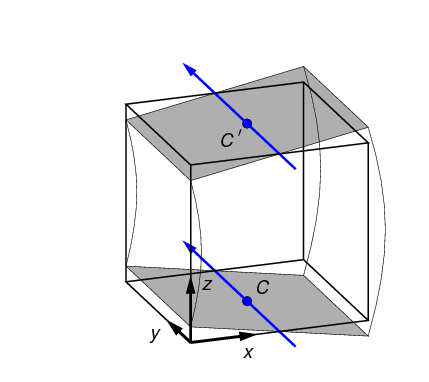

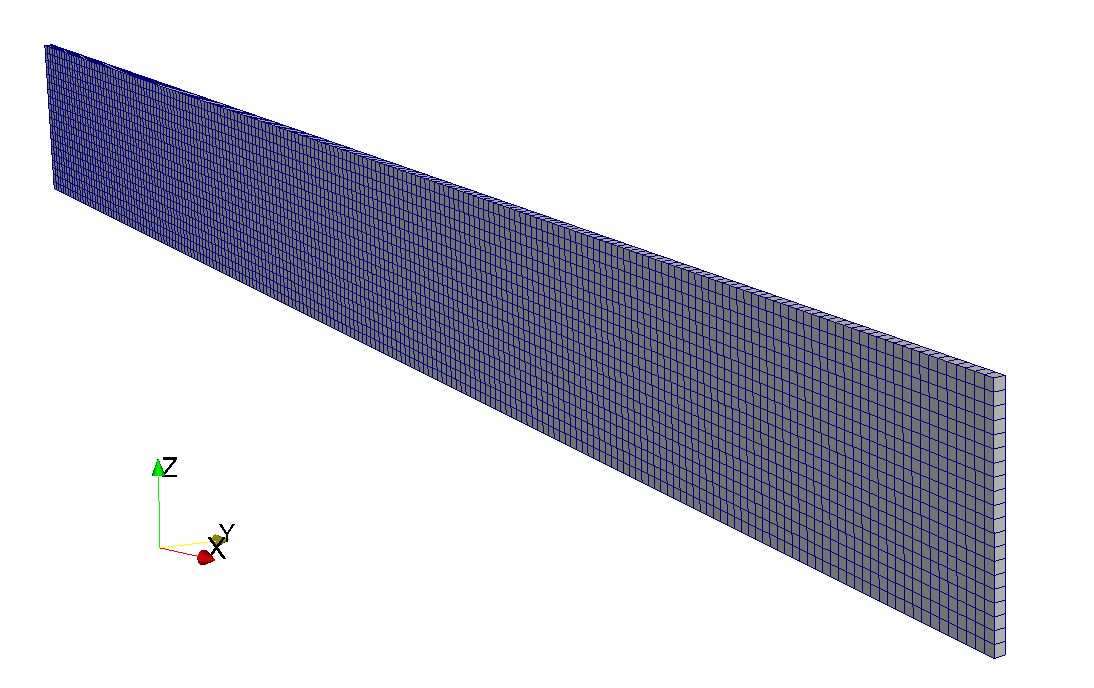

2.3.6. Bending test

In a bending test, the top and bottom surfaces are rotated in opposite directions, as shown in Figure 8. The rotation axes are defined by a neutral axis, given by a point in the x,y plane (shown as C) and an angle in the x,y plane. (The coordinate system shown in the figure is the Test Frame.) The neutral axis, projected onto the top and bottom surfaces, is indicated in blue in Figure 8.

The point and angle defining the neutral axis can be set in n88modelgenerator with the parameters central_axis and neutral_axis_angle respectively. The central axis (point C) can be specified as a numerical x,y pair, or as the center of mass or the center of the data bounds; center of mass is the default setting. The default angle of the neutral axis is 90º, parallel to the y-axis.

The amount of rotation can be specified with the parameter bending_angle. The top and the bottom surfaces are each rotated by half of this value. Positive rotation is defined as in Figure 8.

In a bending test, the top and bottom surfaces are laterally constrained; no x,y motion of the nodes on these surfaces is permitted.

2.3.7. Torsion test

In a torsion test, the top surface is rotated about the center axis, as shown in Figure 9. The angle of rotation is given by twist_angle. In the figure positive rotation is shown. The central axis lies parallel to the z axis in the test frame; its position can be specified with the parameter central_axis. The default position of the central axis is passing through the center of mass.

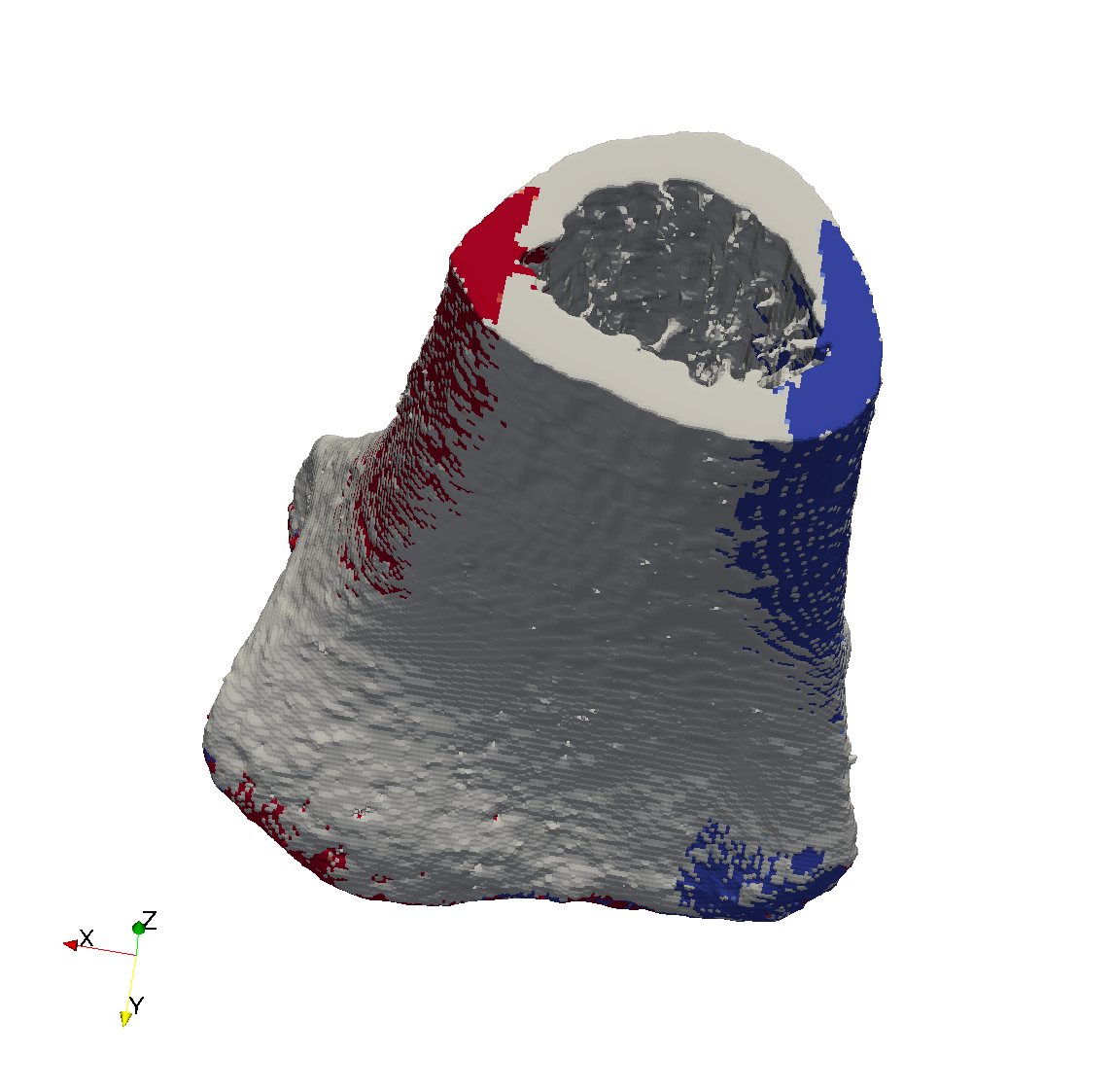

2.4. Uneven surfaces

The grey planes in Figure 5 represent the top and bottom boundary surfaces of the image volume. The actual surface to which boundary conditions are applied is by default that part of your object which passes through these boundaries. If your object does not intersect with the top and bottom boundaries of the image volume, then no boundary conditions can be applied using this method.

An alternative is to make use of the parameters top_surface and bottom_surface. The “uneven surface” near a boundary is defined as the surface visible from that boundary (viewed from an infinite distance). Visibility is evaluated using ray tracing. Thus for a porous material such as bone the “uneven surface” consists of nodes on the top visible surface, not including nodes on surfaces down inside the pores.

The visible setting can be used for any type of test except symshear. For example, if used with a bending test, the boundary conditions are no longer exactly tilted planes as shown in Figure 8; the displacement of each node on the visible surfaces is still calculated as described from its x,y coordinates, but the displaced nodes no longer all lie on a plane (because the initial positions are not on a plane).

Because the visibility test may find some surfaces far from the boundary in question, it should usually be used together with the parameters bottom_surface_maximum_depth and/or top_surface_maximum_depth. These provide a limit, as a distance from the boundary, for the search for the uneven surface.
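The idea of a visible surface limited by a maximum depth can be illustrated with a simplified Python sketch. It works on voxels rather than nodes, casts rays straight down the z axis, and measures depth in voxels rather than in units of length, so it is only an approximation of what top_surface and top_surface_maximum_depth do. The function name and the array layout `labels[x, y, z]` are assumptions.

```python
import numpy as np


def top_visible_surface(labels, maximum_depth=None):
    """For each (x, y) column, return the z index of the highest non-zero voxel.

    Returns an (nx, ny) integer array holding -1 where the column is empty or
    where the first voxel hit lies more than `maximum_depth` voxels below the
    top boundary.
    """
    solid = labels != 0
    nz = labels.shape[2]
    # Looking down from the top: index of the first solid voxel along the ray.
    depth = np.argmax(solid[:, :, ::-1], axis=2)
    surface = nz - 1 - depth
    hidden = ~solid.any(axis=2)
    if maximum_depth is not None:
        hidden |= depth > maximum_depth
    surface[hidden] = -1
    return surface


labels = np.zeros((2, 2, 4), dtype=int)
labels[0, 0, :] = 1          # solid column reaching the top boundary
labels[0, 1, :2] = 1         # column ending two voxels below the top
labels[1, 1, [0, 2]] = 1     # column containing a pore at z = 1
print(top_visible_surface(labels))
print(top_visible_surface(labels, maximum_depth=1))
```

In the column with a pore, only the upper voxel is reported; material below the pore is not visible from the top, which matches the behaviour described for porous materials.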

2.5. Material specification

Material specification consists of two parts:

- Material Definition creation

One or more material definitions are created. A material definition is a mathematical description of material mechanical properties. It has a definite type (e.g. linear isotropic or linear orthotropic) and specific numerical values relevant to that type (e.g. a linear isotropic material definition will have particular values of Young’s modulus and Poisson’s ratio).

- Material Table generation

The material table maps material IDs, as assigned during segmentation, to material definitions. It is possible to combine different types of materials (e.g. isotropic and orthotropic) in the same material table. A single material definition can be assigned to multiple material IDs.

In Numerics88, material definitions are indexed by name. This is not usually evident in n88modelgenerator, as for basic usage, the names are generated automatically, and are not needed by the user. However for advanced material specification, this becomes important, as we will see below when material definition files are discussed.
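The two-level structure described above (definitions indexed by name, and a table mapping material IDs to those names) can be pictured with plain Python dictionaries. This is a conceptual sketch, not the n88model file format or the vtkbone API. The names, keys and the "soft" material values are hypothetical; 6829 MPa and 0.3 are the n88modelgenerator defaults.

```python
# Material definitions, indexed by name.
material_definitions = {
    "bone": {"type": "linear isotropic", "youngs_modulus": 6829.0, "poissons_ratio": 0.3},
    "soft": {"type": "linear isotropic", "youngs_modulus": 100.0, "poissons_ratio": 0.3},
}

# Material table: material ID from segmentation -> name of a material definition.
# Two IDs share the "bone" definition.
material_table = {126: "bone", 127: "bone", 50: "soft"}


def definition_for(material_id):
    """Look up the material definition for a material ID; 0 is background."""
    if material_id == 0:
        return None  # background voxels are not converted to elements
    return material_definitions[material_table[material_id]]


for material_id in (0, 50, 126, 127):
    print(material_id, definition_for(material_id))
```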

2.5.1. Elastic material properties

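Linear elastic materials obey Hooke’s Law,

$$
\begin{bmatrix} \varepsilon_{xx} \\ \varepsilon_{yy} \\ \varepsilon_{zz} \\ \gamma_{yz} \\ \gamma_{zx} \\ \gamma_{xy} \end{bmatrix}
= S
\begin{bmatrix} \sigma_{xx} \\ \sigma_{yy} \\ \sigma_{zz} \\ \sigma_{yz} \\ \sigma_{zx} \\ \sigma_{xy} \end{bmatrix}
$$

where εxx, εyy, εzz are the engineering normal strains along the axis directions, γyz, γzx, γxy are the engineering shear strains, and σij are the corresponding stresses. S is the compliance matrix. The inverse of S gives σ in terms of ε, and is the stress-strain matrix C, also sometimes called the stiffness matrix.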

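An isotropic material has the same mechanical properties in every direction. Elastic isotropic materials are characterized by only two independent parameters: Young’s modulus (E) and Poisson’s ratio (ν). The compliance matrix is

$$
S = \frac{1}{E}
\begin{bmatrix}
1 & -\nu & -\nu & 0 & 0 & 0 \\
-\nu & 1 & -\nu & 0 & 0 & 0 \\
-\nu & -\nu & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & 2(1+\nu) & 0 & 0 \\
0 & 0 & 0 & 0 & 2(1+\nu) & 0 \\
0 & 0 & 0 & 0 & 0 & 2(1+\nu)
\end{bmatrix}
$$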

For n88modelgenerator the isotropic elastic parameters are set with youngs_modulus and poissons_ratio. The default values are 6829 MPa and 0.3, as reported by MacNeil and Boyd (2008).

An isotropic material definition is the default, and will be assumed if no other material type is specified.
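The isotropic relationships can be checked numerically. The NumPy sketch below builds the 6 × 6 isotropic compliance matrix for engineering strains (shear entries equal to 2(1+ν)/E), inverts it to obtain the stress-strain matrix, and applies a uniaxial stress. The function name is hypothetical, and the component order (xx, yy, zz, yz, zx, xy) is the one used in this section.

```python
import numpy as np


def isotropic_compliance(E, nu):
    """6 x 6 compliance matrix S of an isotropic material (engineering shear strains)."""
    S = np.zeros((6, 6))
    S[:3, :3] = -nu / E                                        # coupling between normal components
    S[[0, 1, 2], [0, 1, 2]] = 1.0 / E                          # normal compliances
    S[[3, 4, 5], [3, 4, 5]] = 2.0 * (1.0 + nu) / E             # shear compliances, 1/G
    return S


E, nu = 6829.0, 0.3            # default values of youngs_modulus and poissons_ratio
S = isotropic_compliance(E, nu)
C = np.linalg.inv(S)           # stress-strain (stiffness) matrix

# Uniaxial compressive stress of 1% of E along z.
sigma = np.array([0.0, 0.0, -0.01 * E, 0.0, 0.0, 0.0])
strain = S @ sigma
print("axial strain:", strain[2], "lateral strain:", strain[0])
```

The lateral strain comes out as -ν times the axial strain, which is the definition of Poisson’s ratio.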

Orthotropic elastic materials have two or three mutually orthogonal twofold axes of symmetry. The compliance matrix is

where

| Symbol | Meaning |
|---|---|
| Ei | is the Young’s modulus along axis i |
| νij | is the Poisson’s ratio that corresponds to a contraction in direction j when an extension is applied in direction i |
| Gij | is the shear modulus in direction j on the plane whose normal is in direction i. It is also called the modulus of rigidity. |

Note that the νij are not all independent. The compliance matrix must be symmetric, hence νij/Ei = νji/Ej (no summation implied).

Therefore only 9 independent parameters are required to fully specify an orthotropic material.
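The symmetry requirement on the compliance matrix implies νij/Ei = νji/Ej, so the three remaining Poisson's ratios follow from the nine independent parameters. The sketch below shows that arithmetic. The function name is hypothetical, and the grouping of the inputs as [Exx, Eyy, Ezz] and [νyz, νzx, νxy] is an assumption for the example.

```python
def dependent_poissons_ratios(E, nu):
    """Compute [nu_zy, nu_xz, nu_yx] from the independent parameters.

    E  = [Exx, Eyy, Ezz]
    nu = [nu_yz, nu_zx, nu_xy]
    Uses nu_ij / E_i = nu_ji / E_j, i.e. nu_ji = nu_ij * E_j / E_i.
    """
    Exx, Eyy, Ezz = E
    nu_yz, nu_zx, nu_xy = nu
    nu_zy = nu_yz * Ezz / Eyy
    nu_xz = nu_zx * Exx / Ezz
    nu_yx = nu_xy * Eyy / Exx
    return [nu_zy, nu_xz, nu_yx]


# Illustrative values only (not taken from any study).
print(dependent_poissons_ratios([1000.0, 2000.0, 4000.0], [0.2, 0.3, 0.1]))
```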

For n88modelgenerator the orthotropic elastic parameters are set with orthotropic_parameters. Whenever this parameter is specified, an orthotropic material definition will be used.

An orthotropic material defined with a compliance matrix as given above necessarily has its axes of symmetry aligned with the coordinate axes. In contrast, an orthotropic material which is rotated relative to the coordinate axes has a more general form of compliance matrix. In Numerics88 software, such a non-aligned orthotropic material must be specified as an anisotropic material. A rotation matrix relates elements of the anisotropic compliance matrix to the orthotropic compliance matrix. If you want the mathematical details, please contact us!

The most general form of an elastic material is anisotropic, for which we allow the stress-strain matrix, or the compliance matrix, to have arbitrary values. The stress-strain matrix is symmetric, therefore an anisotropic material requires 21 parameters to define.

To define an anisotropic material with n88modelgenerator, it is necessary to use a material definitions file. See below.

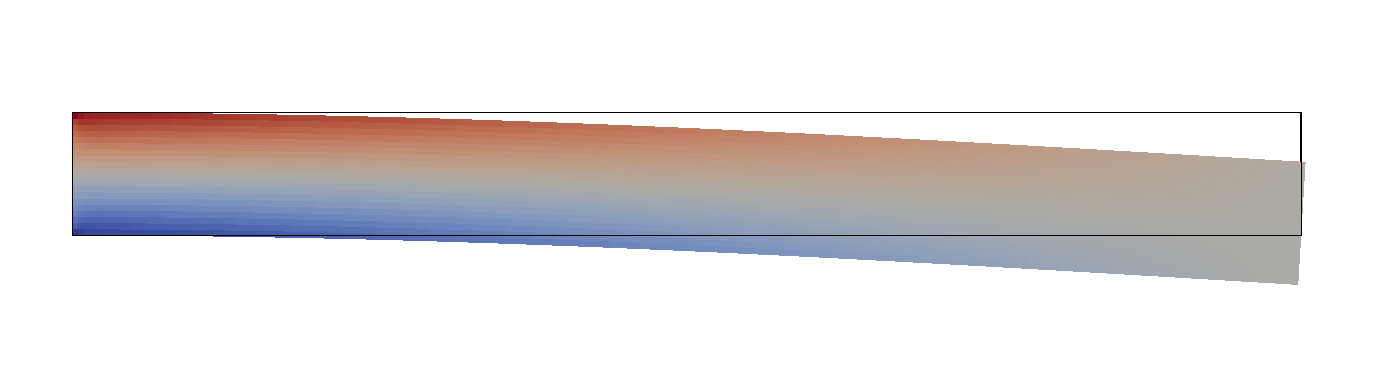

2.5.2. Plasticity

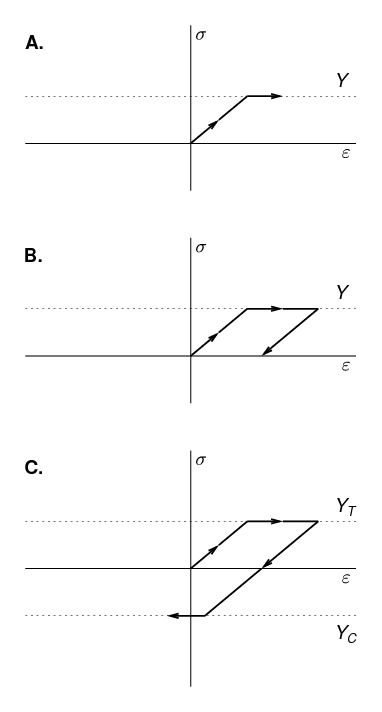

Elastoplastic material behaviour is an idealisation of a particular kind of nonlinear stress-strain relationship in which we divide the stress-strain relationship into two clearly distinguished regions: (1) an elastic region, which is identical to the linear elastic material behaviour described above, and, (2) a purely plastic region. For a material subject to a uniaxial deformation, this is sketched in Figure 10. In Figure 10A, we see the initial elastic region, which in this sketch is tensile, with slope equal to the Young’s modulus. This region continues until the stress reaches the yield strength, Y. For further increases in strain beyond this point, the stress ceases to increase, and remains constant at the yield strength. The incremental strain in this region is referred to as the plastic strain. Although we must perform work to produce a plastic strain, the plastic strain is irreversible, as can be seen in Figure 10B. In B, after applying a large plastic strain, the strain is gradually reduced. The plastic strain component is unchanged during the relaxation; instead, it is the elastic component of the strain that is reduced. As the stress depends entirely on the elastic component of the strain, the stress also immediately begins to decrease. Hence, we do not travel back along the “outward” path, but instead return along a shifted version of the linear elastic region: the material exhibits hysteresis. Although the initial point and the final point of Figure 10B are both characterised by zero strain, the state is quite different, as the latter state has a large plastic strain. If we continue to reduce the strain, as in Figure 10C, the stress becomes negative - a compressive stress - until, once again, we reach the yield strength limit. Note that the yield strength in tension and the yield strength in compression are not necessarily equal.
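The uniaxial behaviour described above (elastic loading, yielding at constant stress, and unloading along a shifted elastic line) can be reproduced with a few lines of Python. This is a minimal sketch of a one-dimensional, perfectly plastic stress update written for illustration; it is not the algorithm used by the Faim solver, and the function name and numerical values are made up.

```python
def uniaxial_response(strains, E, Y_tension, Y_compression):
    """Follow a strain history and return (strain, plastic strain, stress).

    The stress depends only on the elastic part of the strain. When the
    trial stress exceeds a yield strength, the excess strain is moved into
    the plastic strain and the stress is held at the yield strength.
    """
    plastic_strain = 0.0
    history = []
    for strain in strains:
        stress = E * (strain - plastic_strain)  # elastic trial stress
        if stress > Y_tension:
            stress = Y_tension
            plastic_strain = strain - Y_tension / E
        elif stress < -Y_compression:
            stress = -Y_compression
            plastic_strain = strain + Y_compression / E
        history.append((strain, plastic_strain, stress))
    return history


# Load in tension past yield, unload to zero strain, then compress.
history = uniaxial_response([0.005, 0.02, 0.01, 0.0, -0.02],
                            E=1000.0, Y_tension=10.0, Y_compression=20.0)
for strain, plastic_strain, stress in history:
    print(strain, plastic_strain, stress)
```

When the strain returns to zero the stress is compressive rather than zero, because the plastic strain accumulated during yielding is retained. The tensile and compressive yield strengths are deliberately different here.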

In three dimensions, an elastoplastic material is characterised by a yield surface, which is the boundary of the elastic region. The yield surface is defined in stress space (or equivalently in strain space). For isotropic materials, the yield surface is most easily defined in the space of the three principal stresses. Stresses lying inside the yield surface are associated with an elastic state. When the stress reaches the yield surface, the material yields, and further increases in strain result in the stress state moving along the yield surface. Many different shapes of yield surface are possible, and are characteristic of different kinds of materials. Yield surfaces are conveniently defined mathematically by a yield function f, such that the yield surface is the locus of points for which f = 0. A complete explanation of elastoplastic behaviour in three dimensions is not given here. We recommend that you consult a good mechanics of materials textbook.

To specify an elastoplastic material, it is necessary to specify both its elastic properties and its plasticity. Currently Faim only allows plasticity to be defined with isotropic elastic properties. A typical specification of an elastoplastic model using n88modelgenerator will look something like this:

n88modelgenerator --youngs_modulus=6829 --poissons_ratio=0.3 --plasticity=vonmises,50 mydata.aim

The currently supported plastic yield criteria are described below.

The von Mises yield criterion states that yielding begins when the distortional strain-energy density attains a certain limit. It is therefore also called the distortional energy density criterion. The distortional strain-energy density is the difference between the total strain energy density and the strain energy density arising only from the part of the strain resulting in a volume change. Hence, the distortional strain-energy density is associated with that part of the strain that results in no volume change: or, in other words, the part of the strain that causes a change in shape.

The yield function for a von Mises material, in terms of the three components of the principal stress, is given by

The parameter Y is the yield strength in uniaxial tension (or compression).

To specify a von Mises material in n88modelgenerator, use the parameter plasticity. For example, --plasticity=vonmises,68 will define a von Mises material with Y = 68. The units of Y are the same as stress, so typically MPa.
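To make the criterion concrete, the following sketch evaluates a von Mises yield function for a set of principal stresses. It uses the standard textbook form, in which the equivalent stress is the square root of half the sum of squared principal stress differences; that this matches the exact normalisation used internally by Faim is an assumption. The function name is hypothetical.

```python
import math


def von_mises_yield_function(s1, s2, s3, Y):
    """Return f for principal stresses s1, s2, s3 and yield strength Y.

    f < 0: elastic state; f = 0: on the yield surface.
    """
    equivalent_stress = math.sqrt(
        0.5 * ((s1 - s2) ** 2 + (s2 - s3) ** 2 + (s3 - s1) ** 2))
    return equivalent_stress - Y


# Uniaxial tension at exactly the yield strength lies on the yield surface.
print(von_mises_yield_function(68.0, 0.0, 0.0, 68.0))
# A purely hydrostatic stress changes volume but not shape, so it never yields.
print(von_mises_yield_function(100.0, 100.0, 100.0, 68.0))
```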

The Mohr-Coulomb yield criterion is a refinement of the Tresca yield condition, which states that yielding begins when the shear stress at a point exceeds some limit. To this, the Mohr-Coulomb criterion adds a dependence on the hydrostatic stress, such that the shear stress limit is dependent on the hydrostatic stress, in particular that the shear stress limit increases with hydrostatic stress. The Mohr-Coulomb yield criterion is characterised by two values: the cohesion c and the angle of internal friction φ. For principal stresses in the order σ1 > σ2 > σ3, the Mohr-Coulomb yield function is

Under uniaxial tension, the yield strength is

while under uniaxial compression, the yield strength is

Mohr-Coulomb materials are specified to n88modelgenerator using these two yield strengths. See plasticity. For example, --plasticity=mohrcoulomb,40,80 will define a Mohr-Coulomb material with YT = 40 and YC = 80. The units of YT and YC are the same as stress, so typically MPa.
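Because the criterion is characterised by c and φ while n88modelgenerator takes YT and YC, it can be handy to convert between the two descriptions. The sketch below uses the standard textbook relations for a Mohr-Coulomb material with tension taken as positive, YT = 2c cos φ / (1 + sin φ) and YC = 2c cos φ / (1 − sin φ). That these are the forms intended by this manual is an assumption, as are the function names, and φ is taken in radians.

```python
import math


def yield_strengths_from_c_phi(c, phi):
    """Return (YT, YC) for cohesion c and friction angle phi (radians)."""
    YT = 2.0 * c * math.cos(phi) / (1.0 + math.sin(phi))
    YC = 2.0 * c * math.cos(phi) / (1.0 - math.sin(phi))
    return YT, YC


def c_phi_from_yield_strengths(YT, YC):
    """Invert the relations above: return (c, phi) with phi in radians."""
    phi = math.asin((YC - YT) / (YC + YT))
    c = 0.5 * math.sqrt(YT * YC)
    return c, phi


c, phi = c_phi_from_yield_strengths(40.0, 80.0)
print(c, math.degrees(phi))
print(yield_strengths_from_c_phi(c, phi))
```

Setting φ = 0 gives YT = YC = 2c, which is the Tresca limit mentioned above.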

2.5.3. Material table generation

The parameter material_table allows one of two standard types of material table to be chosen: a simple table, consisting of a single material definition, or a Homminga material table, which relates density to stiffness.

If you require a material table different than either of the standard ones offered, you can use a material definitions file. See below for details.

The simplest material table consists of a single material definition. Thus it corresponds to homogeneous material properties: all elements are assigned the same material properties.

Note that although only a single material definition is created, it is nevertheless still possible for the material table to have multiple entries corresponding to multiple material IDs. In this case every material ID in the table maps, of course, to the same single material definition. n88modelgenerator automates this: it examines the segmented input image, and generates one entry in the material table for every unique material ID (i.e. image value) present in the segmented image. Clearly, as a matter of efficiency and simplicity, if you intend to use an image for analysis with homogeneous material properties, it is preferable to segment the image object to a single material ID. It is not, however, necessary to do so.

Homminga (2011) introduced a model that attempts to account for varying bone strength with density. In this model, the moduli vary according to the equation

where ρ is the CT image density. For the orthotropic case, the shear moduli follow the same scaling, and for the anisotropic case, the stress-strain matrix follows this scaling.

To use this type of material table, the data must be segmented such that the material ID of each voxel is proportional to its CT image density. As material IDs are discrete, the CT image density is therefore binned. Hence

IDmax is set with the parameter homminga_maximum_material_id, and the exponent is set with the parameter homminga_modulus_exponent.

Emax will depend on the specified material. For an isotropic material, it will be given by youngs_modulus. However, orthotropic or anisotropic materials may also be scaled: the entire stress-strain matrix scales according to the above-stated law.
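The binned scaling can be illustrated with a short sketch. It assumes that the law is a power law in the ratio of material ID to IDmax, E = Emax (ID / IDmax)^exponent, which is what the parameter names suggest; the function name and the exponent value used in the example are hypothetical and are not Faim defaults.

```python
def homminga_modulus(material_id, E_max, id_max, exponent):
    """Return the modulus assigned to one material ID (assumed power law)."""
    if not 1 <= material_id <= id_max:
        # IDs above id_max have no entry in a Homminga material table.
        raise ValueError("material ID %d is outside 1..%d"
                         % (material_id, id_max))
    return E_max * (float(material_id) / id_max) ** exponent


# Illustrative values: E_max in MPa, exponent chosen only for the example.
for material_id in (32, 64, 127):
    print(material_id, homminga_modulus(material_id, 6829.0, 127, 1.7))
```

The same factor would multiply every element of the stress-strain matrix for an orthotropic or anisotropic material.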

In contrast to the homogeneous material table, a Homminga material table is not guaranteed a priori to have an entry for every material ID present in the input segmented image. If your segmented image has material IDs larger than the specified IDmax, n88modelgenerator will produce an error.

2.5.4. Material definitions file

The method presented up to this point for defining materials in n88modelgenerator, while relatively easy, suffers from some limitations. In particular, only a single material can be specified using the options discussed so far. Furthermore, an anisotropic material cannot be specified at all using the command line parameters. n88modelgenerator therefore provides an alternative method of defining materials, which is via a material definitions file. A material definitions file can be used to define any material known to Faim, any number of materials, and any material table. A material definitions file can be specified with the argument material_definitions.

The material definitions file format is straightforward, as you can see from this example.

MaterialDefinitions:

  CorticalBone:

    Type: LinearIsotropic

    E: 6829

    nu: 0.3

  TrabecularBone:

    Type: LinearIsotropic

    E: 7000

    nu: 0.29

MaterialTable:

  100: TrabecularBone

  127: CorticalBone

Here we have a material definitions file containing two materials, both of which are linear elastic materials, but with different numerical values. Besides defining the materials, we have to specify the material table, which maps material IDs (i.e. the values present in the input image) to the materials we have defined.

The indenting in a material definitions file is important, as it indicates how objects are grouped. Indenting must be done with spaces, and not with tabs. Technically, the material definitions file is in YAML format. Thus any valid YAML syntax can be used. In particular, it is possible to use curly brackets instead of indenting to indicate nesting, should you so wish.

Here is another example material definitions file, which defines an anisotropic material.

MaterialDefinitions:

  ExampleAnisoMat:

    Type: LinearAnisotropic

    StressStrainMatrix: [

      1571.653, 540.033, 513.822, 7.53 , -121.22 , -57.959,

      540.033, 2029.046, 469.974, 78.591, -53.69 , -50.673,

      513.822, 469.974, 1803.998, 20.377, -57.014, -15.761,

      7.53 , 78.591, 20.377, 734.405, -23.127, -36.557,

      -121.22 , -53.69 , -57.014, -23.127, 627.396, 13.969,

      -57.959, -50.673, -15.761, -36.557, 13.969, 745.749]

MaterialTable:

  1: ExampleAnisoMat
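Since all 36 values must be supplied even though only 21 are independent, it is worth checking a stress-strain matrix for symmetry before putting it in a file. The following sketch does this in plain Python for the matrix in the example above; it is an illustrative check only and not part of the Numerics88 tools.

```python
# The 36 values from the example, in row-major order.
values = [
    1571.653,  540.033,  513.822,   7.53,  -121.22,  -57.959,
     540.033, 2029.046,  469.974,  78.591,  -53.69,  -50.673,
     513.822,  469.974, 1803.998,  20.377,  -57.014, -15.761,
       7.53,    78.591,   20.377, 734.405,  -23.127, -36.557,
    -121.22,   -53.69,   -57.014, -23.127,  627.396,  13.969,
     -57.959,  -50.673,  -15.761, -36.557,   13.969, 745.749,
]

# Reshape the flat list into 6 rows of 6.
K = [values[6 * i:6 * i + 6] for i in range(6)]

# A valid stress-strain matrix equals its own transpose.
is_symmetric = all(K[i][j] == K[j][i] for i in range(6) for j in range(6))

# The independent values are the diagonal plus the upper triangle.
independent = [K[i][j] for i in range(6) for j in range(i, 6)]

print(is_symmetric, len(independent))
```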

The possible materials, and the values that must be specified for each, are listed in the following table.

| Type | Variable | Description |
|---|---|---|
| LinearIsotropic | E | Young’s modulus |
| | nu | Poisson’s ratio |
| LinearOrthotropic | | Young’s moduli as [Exx,Eyy,Ezz] |
| | | Poisson’s ratios as [νyz,νzx,νxy] |
| | | Shear moduli as [Gyz,Gzx,Gxy] |
| LinearAnisotropic | StressStrainMatrix | The 36 elements of the stress-strain matrix. Since the stress-strain matrix is symmetric, there are only 21 unique values, but all 36 must be given. They must be specified in the order K11, K12, K13, ..., K66, but since the matrix is symmetric, this is equivalent to K11, K21, K31, ..., K66. |
| VonMisesIsotropic | | Young’s modulus |
| | | Poisson’s ratio |
| | | Yield strength |
| MohrCoulombIsotropic | | Young’s modulus |
| | | Poisson’s ratio |
| | | Mohr Coulomb c parameter (cohesion). |
| | | Mohr Coulomb φ parameter (friction angle). |

Materials are stored in the n88model file using exactly the same structure and variable names. See the MaterialDefinitions group of the n88model file specification.

3. Preparing Finite Element Models with vtkbone

You only need to read this chapter if you are interested in writing scripts to create your own custom model types. For many users, n88modelgenerator, as described in the previous chapter, will be sufficient.

Although n88modelgenerator can generate many useful models for FE analysis, at times additional flexibility is required. In order to provide as much flexibility to the user as possible, we provide the option for users to perform custom model generation. This functionality is provided by the vtkbone toolkit, which is built on VTK. VTK is "an open-source, freely available software system for 3D computer graphics, image processing and visualization", produced by Kitware. VTK provides a great deal of functionality to manipulate and visualize data that is useful for finite element model generation and analysis. vtkbone is a collection of custom VTK classes that extend VTK to add finite element pre-processing functionality. The vtkbone library objects, combined with generic VTK objects, can be quickly assembled into pipelines, which makes finite element model generation and post-processing analysis highly customizable.

Basing vtkbone on VTK provides the following advantages.

- Large existing library of objects and filters

-

VTK is a large and well established system, with many useful algorithms already packaged as VTK objects.

- Built-in visualization

-

VTK was designed for rendering medical data. With it, sophisticated visualizations are readily obtained. The visualization application ParaView is built with VTK and provides excellent complex data visualization. vtkbone functionality is easily incorporated into ParaView.

- Standardization

-

The time and effort the user invests in learning vtkbone provides familiarity with the widely-used VTK system, and is not limited in application to Numerics88 software.

- Extensibility

-

The advanced user is able to write their own VTK classes where required.

- Multiple Language Support

-

VTK itself consists of C++ classes, but provides wrapping functionality to generate an interface layer to other languages. vtkbone uses this wrapping functionality to provide a Python interface.

The best way to learn to use vtkbone is to follow the tutorials in Tutorials.

We recommend that you use Python for assembling programs with vtkbone. There are several advantages of using Python, including simplicity of syntax and automatic memory management. In our experience, writing programs using vtkbone in Python is far less time consuming, and results in programs that are much easier to debug, than writing the same programs in C++. This is particularly true for users without significant programming experience.

Many of the tools provided with Faim are implemented as Python scripts. Some use vtkbone, and some manipulate the n88model file directly through the Python netCDF4 module, which is installed automatically when you install n88tools. Examining how these scripts work can be a good way to learn how to write your own custom Python scripts for manipulating Faim models.

3.1. vtkbone API documentation

The complete Application Programming Interface (API) reference documentation for vtkbone is available at http://numerics88.com/documentation/vtkbone/1.0/ . Class names in this document are linked to the vtkbone API documentation, so that if you click on a class name, it should open the documentation for that class. You should refer to the vtkbone API reference documentation whenever using a vtkbone class, as the descriptions below are introductory, and do not exhaustively cover all the functionality.

API documentation for VTK can be found at http://www.vtk.org/doc/release/6.3/html/ . Or you can get the latest version at https://vtk.org.download/ .

If you are using Python instead of C++ (and for most purposes, we recommend that you do), then you will have to infer the Python interface from the C++ interface. This is generally simple: class and method names are the same, as are the positional arguments. Since Python is a dynamically-typed language, you do not generally need to be concerned with the declared type of the arguments. A small number of C++ methods in vtkbone do not have a Python equivalent, but this is rare.

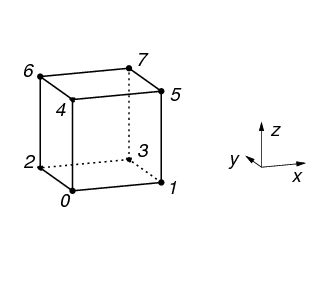

3.2. Array indexing in vtkbone

Arrays in vtkbone, as in VTK, C and Python, are zero-indexed. That is, the first element is element 0. This applies also to node numbers and element numbers. (It does not however apply to material IDs, because the material ID is not an array index; it is a key into a table look-up.) Be careful if reading an n88model file directly, because node numbers and element numbers are stored 1-indexed (see the file specification). The translation is done automatically if you use the vtkbone objects vtkboneN88ModelReader and vtkboneN88ModelWriter.
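If you do read node or element numbers from an n88model file yourself, the conversion is a simple offset. The sketch below uses a made-up list of node numbers standing in for values read from a file; the function name and the data are hypothetical.

```python
def to_zero_indexed(numbers_from_file):
    """Convert 1-indexed node numbers, one row per element, to 0-indexed."""
    return [[n - 1 for n in row] for row in numbers_from_file]


# Hypothetical node numbers of a single element, as stored 1-indexed.
element_nodes_in_file = [[1, 2, 4, 5, 10, 11, 13, 14]]
element_nodes = to_zero_indexed(element_nodes_in_file)
print(element_nodes)
```

Material IDs must not be shifted in this way, since they are look-up keys rather than array indices.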

3.3. Typical workflow for vtkbone

Figure 11 shows a typical work flow for creating a finite element model using vtkbone. VTK generally consists of two types of objects (or “classes”): objects that contain data, and objects that process data. The latter are called “filters”. Filters can be chained together to create a processing pipeline. In the figure, filters are listed in the boxes with dark black lines and rounded corners; data objects are listed in gray rectangles. The boxes with dark black lines and rounded corners represent actions, and may list one or more VTK or vtkbone objects that are suitable for performing the action.

The typical way to use a VTK filter object is to create it, set parameters, set the input (if relevant), call Update(), and then get the output. For example, for a vtkboneAIMReader object, which has an output but no input, we might do this in Python:

reader = vtkbone.vtkboneAIMReader()

reader.SetFileName ("MYFILE.AIM")

reader.Update()

image = reader.GetOutput()

There is an alternate way to use VTK filter objects, and that is to chain them together in a pipeline. In this case, calling Update on the last item in the pipeline will cause an update of all the items in the pipeline, if required. To connect filters in a processing pipeline, use SetInputConnection and GetOutputPort, as in this example:

reader = vtkbone.vtkboneAIMReader()

reader.SetFileName ("MYFILE.AIM")

mesher = vtkbone.vtkboneImageToMesh()

mesher.SetInputConnection (reader.GetOutputPort())

mesher.Update()

This automatically calls Update on the reader.

Generally, unless you have some application where the pipeline approach is advantageous, we recommend the simpler method of calling Update() and GetOutput() on each filter in turn, as shown above.

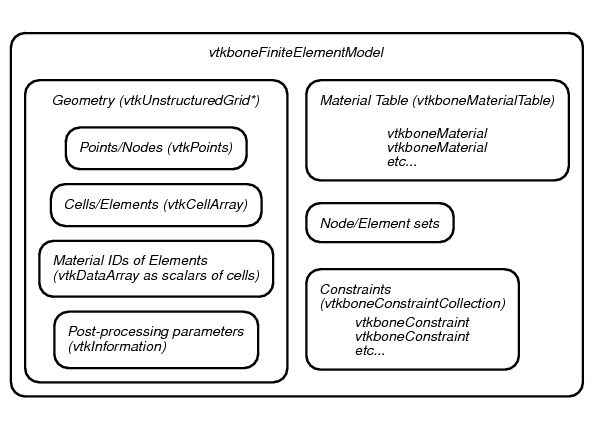

3.4. The vtkboneFiniteElementModel object

The central class that vtkbone adds to VTK is a new type of data class, called vtkboneFiniteElementModel. The conceptual structure of vtkboneFiniteElementModel is shown in Figure 12.

vtkboneFiniteElementModel is a subclass of the VTK class vtkUnstructuredGrid, which represents a data set as consisting of an assortment of geometric shapes. In VTK terminology, these individual shapes are “Cells”. They map naturally to the concept of Elements in finite element analysis. The vertices of the cells, in VTK terminology, are “Points”. These are equivalent to Nodes in finite element analysis. vtkUnstructuredGrid, like other VTK objects, is capable of storing specific types of additional information, such as one or more scalar or vector values associated with each Cell or Point. This aspect is used for storing, for example, material IDs, which are mapped to the scalar values of the Cells. To vtkUnstructuredGrid, vtkboneFiniteElementModel adds some additional information related to requirements for finite element analysis, such as material properties and constraints (i.e. displacement boundary conditions and applied loads), as well as sets of Points and/or Cells, which are useful for defining constraints and in post-processing. Sets and constraints are indexed by an assigned name, which is convenient for accessing and modifying them.

3.5. Reading a segmented image

We typically read in data as a segmented 3D volumetric image from micro-CT. The image values (i.e. the pixel or voxel values) need to correspond to the segmented material IDs. The selection of a file reading object will be appropriate to the input file format. The output of the file reader filter will be a vtkImageData object.

We have already given the example of using a vtkboneAIMReader to read a Scanco AIM file, which was

reader = vtkbone.vtkboneAIMReader()

reader.SetFileName ("MYFILE.AIM")

reader.Update()

image = reader.GetOutput()

One slightly obscure technical issue which may arise is: are the data of a vtkImageData associated with the Points or the Cells? Either is possible in VTK. The scalar image data (scalar because there is one value per location) must be explicitly associated either with the Cells or with the Points. (In fact it’s possible to have different scalar data on the Cells and the Points.) Thinking about it as an FE problem, it is natural to associate the image data with Cells, where each image voxel becomes an FE element. Thus, we like to think of each value in the image as being at the center of a Cell in the VTK scheme. VTK however has generally standardized on the convention of image data being on the Points. There are some important consequences. For example, a vtkImageData that has dimensions of (M,N,P) has (M,N,P) Points, but only (M-1,N-1,P-1) Cells. Or conversely, if you have data with dimensions of (R,S,T), and you want to put the data on the Cells, then you actually require a vtkImageData with dimensions of (R+1,S+1,T+1). Also, if the origin is important, one needs to keep in mind that the origin is the location of the zero-indexed Point. In terms of the Cells, this is the “lower-left” corner of the zero-indexed Cell, not the center of that Cell. vtkbone objects that require images as input will accept input with data either on the Points or on the Cells. However, many VTK objects that process images work correctly only with input data on the Points. By contrast, if you want to render the data and color according to the scalar image, the rendering is quite different depending on whether the scalar data is on the Points or the Cells. Generally, you get the expected result in this case by putting the data on the Cells. This discussion is important because it illustrates that there is no one universally correct answer to whether image data belongs on the Points or on the Cells. vtkbone image readers, such as vtkboneAIMReader, have an option DataOnCells to control the desired behaviour. VTK image readers always put image data on the Points, so if you need the data on the Cells, you must copy the output to a new vtkImageData.
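The bookkeeping between Point dimensions, Cell counts and the origin is easy to get wrong, so here is a small plain-Python sketch of the arithmetic described above. The helper names are hypothetical and no VTK calls are involved.

```python
def point_dimensions_for_cell_data(data_dims):
    """Dimensions a vtkImageData needs so that (R,S,T) values fit on Cells."""
    return tuple(n + 1 for n in data_dims)


def number_of_cells(point_dims):
    """Number of Cells in an image whose Point dimensions are (M,N,P)."""
    m, n, p = point_dims
    return (m - 1) * (n - 1) * (p - 1)


def center_of_first_cell(origin, spacing):
    """The origin is the corner of Cell 0; its center is half a voxel in."""
    return tuple(o + 0.5 * s for o, s in zip(origin, spacing))


dims = point_dimensions_for_cell_data((100, 120, 80))
print(dims, number_of_cells(dims))
print(center_of_first_cell((0.0, 0.0, 0.0), (0.034, 0.034, 0.034)))
```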

Examples of reading data with vtkbone are given in the tutorials Compressing a cube revisited using vtkbone and Advanced custom model tutorial: a screw pull-out test.

3.6. Reading an unsegmented image and segmenting it

If you have an unsegmented image, such as a raw micro-CT image that has scalar values still in terms of density, it is possible to process the image with VTK. Segmentation is a complex subject, which we are unable to cover here. For an example of a simple segmentation, where only a density threshold and connectivity are considered, consult the example in the directory examples/segment_dicom.

3.7. Ensuring connectivity

A well-defined problem for finite element analysis requires that all parts of the object in the image be connected as a single object.

Disconnected parts in the input create an ill-defined problem, with an infinite number of solutions. Disconnected parts arise, for example, when there is noise in the image data. Some solvers cannot solve this kind of problem at all (the global stiffness matrix is singular). n88solver will typically find a solution. However, the convergence might be very slow as it attempts to find the non-existent optimum position for the disconnected part.

To avoid this problem, the input image can be processed with the filter vtkboneImageConnectivityFilter. This filter has many options and different modes of operation, but in most cases the default mode is sufficient. In the default behaviour:

-

Zero-valued voxels are considered “empty space”, and no connection is possible through them.

-

Voxels are considered connected if they share a face. Sharing only a corner or an edge is not sufficient.

-

Only the largest connected object in the input image is passed to the output image. Smaller objects that have no continuous path of connection to the largest connected object are zeroed-out.

Because of the first point, it is clearly necessary to segment the image before applying vtkboneImageConnectivityFilter, because empty space must be labelled with the value 0.

connectivity_filter = vtkbone.vtkboneImageConnectivityFilter()

connectivity_filter.SetInput (image)

connectivity_filter.Update()

image = connectivity_filter.GetOutput()

Notice that we use the variable name image for both the input and the output. This causes no problems in Python, although by doing this we can no longer use this name to refer to the input image, as the second assignment to image rebinds the variable to the value of connectivity_filter.GetOutput(). If it were necessary to refer to the input image, then we should use different names for the input and output.
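To make the default connectivity rules concrete (zero is empty space, only face neighbours connect, only the largest object survives), here is a small self-contained Python sketch that applies them to an image stored as nested lists. It is written for illustration only, is far too slow for real scans, and is not how vtkboneImageConnectivityFilter is implemented; the function name is hypothetical.

```python
from collections import deque

# The six face neighbours of a voxel; edge and corner neighbours are excluded.
FACE_NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
                   (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def keep_largest_component(image):
    """image[z][y][x] holds material IDs; 0 is empty space.

    Returns (new image with only the largest object, number of objects).
    """
    nz, ny, nx = len(image), len(image[0]), len(image[0][0])
    visited = set()
    components = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if image[z][y][x] == 0 or (x, y, z) in visited:
                    continue
                # Breadth-first flood fill from this unvisited voxel.
                members = [(x, y, z)]
                visited.add((x, y, z))
                queue = deque(members)
                while queue:
                    cx, cy, cz = queue.popleft()
                    for dx, dy, dz in FACE_NEIGHBOURS:
                        p = (cx + dx, cy + dy, cz + dz)
                        px, py, pz = p
                        if (0 <= px < nx and 0 <= py < ny and 0 <= pz < nz
                                and image[pz][py][px] != 0
                                and p not in visited):
                            visited.add(p)
                            members.append(p)
                            queue.append(p)
                components.append(members)
    output = [[[0] * nx for _ in range(ny)] for _ in range(nz)]
    if components:
        for x, y, z in max(components, key=len):
            output[z][y][x] = image[z][y][x]
    return output, len(components)


# One slice: the voxel at (x=2, y=2) touches the main object only at a corner.
image = [[[1, 1, 0, 0],
          [0, 1, 0, 0],
          [0, 0, 1, 0]]]
cleaned, number_of_objects = keep_largest_component(image)
print(number_of_objects)
print(cleaned)
```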

If your input image is processed and segmented with another software package, it is still a good idea to verify the connectivity of the input image. If your input image is carefully prepared, and you don’t want to inadvertently modify it, you can check for connectivity without modifying it like this:

connectivity_mapper = vtkbone.vtkboneImageConnectivityMap()

connectivity_mapper.SetInput (image)

connectivity_mapper.Update()

if connectivity_mapper.GetNumberOfRegions() != 1:

    print("WARNING: Input image does not consist of a single connected object.")

Examples of ensuring connectivity are given in the tutorials Compressing a cube revisited using vtkbone and Advanced custom model tutorial: a screw pull-out test.

3.8. Generating a mesh

Once we have a segmented and connected image, we convert the image to a mesh of elements, represented by

a vtkUnstructuredGrid object. The base class vtkUnstructuredGrid supports many

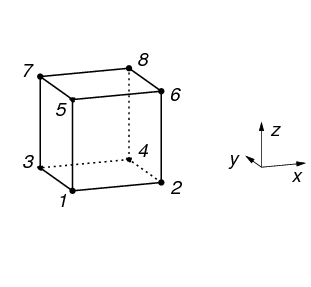

types of Cells, but currently vtkbone supports only VTK_HEXAHEDRON and VTK_VOXEL. The topology of VTK_VOXEL is shown in Figure 13.

Generating a mesh in vtkbone is straightforward; simply pass the input image to a vtkboneImageToMesh filter. It takes no options; the important choices were made already during the segmentation stage. In Python this looks like this:

mesher = vtkbone.vtkboneImageToMesh()

mesher.SetInput (image)

mesher.Update()

mesh = mesher.GetOutput()

vtkboneImageToMesh creates one Cell (i.e. element) of type VTK_VOXEL for every voxel in the input image that has non-zero scalar value. The scalar values of the image become the scalar values of the output Cells. It also creates Points at the vertices (corners) of the Cells. There are no duplicate Points: neighbouring Cells will reference the same Point on shared vertices.

Cells and Points in VTK are ordered; they can be indexed by consecutive Cell or Point number. This ordering is not inconsequential. In Faim, the solver efficiency is optimal with certain orderings, and in fact there are orderings for which the solver fails with an error message. The ordering output by vtkboneImageToMesh is “x fastest, z slowest”. It is recommended to always preserve this ordering.
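The following plain-Python sketch mimics the behaviour just described on a tiny image: one Cell per non-zero voxel, shared corner Points created only once, and “x fastest, z slowest” ordering. It is an illustration, not the vtkboneImageToMesh implementation. The function name is hypothetical, and it is an assumption that the ordering applies to Points as well as Cells. The corner order within each Cell follows the VTK_VOXEL convention (x varies fastest).

```python
# Corner offsets of a voxel in VTK_VOXEL order: x fastest, then y, then z.
CORNERS = [(dx, dy, dz) for dz in (0, 1) for dy in (0, 1) for dx in (0, 1)]


def image_to_voxel_mesh(image):
    """image[z][y][x] holds material IDs. Returns (points, cells, scalars).

    points  : list of (i, j, k) corner indices, unique, x fastest
    cells   : list of 8 point numbers per non-zero voxel, x fastest
    scalars : the material ID of each cell
    """
    nz, ny, nx = len(image), len(image[0]), len(image[0][0])
    voxels = [(x, y, z)
              for z in range(nz) for y in range(ny) for x in range(nx)
              if image[z][y][x] != 0]
    # Collect each corner once, then number them x fastest, z slowest.
    corner_set = {(x + dx, y + dy, z + dz)
                  for (x, y, z) in voxels for (dx, dy, dz) in CORNERS}
    points = sorted(corner_set, key=lambda p: (p[2], p[1], p[0]))
    number = {p: i for i, p in enumerate(points)}
    cells = [[number[(x + dx, y + dy, z + dz)] for (dx, dy, dz) in CORNERS]
             for (x, y, z) in voxels]
    scalars = [image[z][y][x] for (x, y, z) in voxels]
    return points, cells, scalars


# Two neighbouring bone voxels and one empty voxel.
points, cells, scalars = image_to_voxel_mesh([[[127, 127, 0]]])
print(len(points), cells, scalars)
```

The two Cells share the four Points on their common face, so the mesh has 12 Points rather than 16.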

3.9. Defining materials

Material definitions are generated by creating an instance of a class of the corresponding material type, and then calling the appropriate methods to set the material parameters. Every material must have a unique name. vtkbone will assign default unique names when defining a new material object, but the names will be more meaningful if you assign them yourself.

vtkbone version 9 supports the following material classes:

Plus special material classes that are in fact material arrays, which are discussed below.

Here is an example of creating a simple linear isotropic material:

linear_material = vtkbone.vtkboneLinearIsotropicMaterial()

linear_material.SetYoungsModulus (800)

linear_material.SetPoissonsRatio (0.33)

linear_material.SetName ("Linear 800")

As discussed in A note about units, the units of Young’s modulus depend on the input length units. If the length units are mm and force units in N, then Young’s modulus has units of MPa.

This is the construction of a Mohr-Coulomb elastoplastic material:

ep_material = vtkbone.vtkboneMohrCoulombIsotropicMaterial()

ep_material.SetYoungsModulus (800)

ep_material.SetPoissonsRatio (0.33)

ep_material.SetYieldStrengths (20, 40)

ep_material.SetName ("Mohr Coulomb material 1")

For Mohr-Coulomb, the pair of numbers passed to SetYieldStrengths is the yield strength in tension and the yield strength in compression. The units of yield strength are the same as stress, so typically MPa.

In addition to the standard material classes, there are material array classes. Simply put, these define not a single material, but a sequence of similar materials with variable parameters. For a discussion of the circumstances in which this could be useful, see Efficient Handling of Large Numbers of Material Definitions. The material array classes themselves inherit from vtkboneMaterial (rather than being a collection of vtkboneMaterial objects). They can therefore be used nearly anywhere that a regular material can be; in particular, they can be inserted as entries into a material table. The material array classes are

3.10. Constructing a material table

Once you have created your material definitions, you need to combine them into a material table, which maps material IDs to the material definitions.

For example, if we want a homogeneous material, and the input image has only the scalar value 127 for bone (and 0 for background), then we can make a material table like this:

materialTable = vtkbone.vtkboneMaterialTable()

materialTable.AddMaterial (127, linear_material)

We created the material linear_material above.

We must assign a material for every segmentation value present in the input. The same material can be assigned to multiple material IDs. For example, suppose the input image has segmentation values 100-103, and we have created two different materials, materialA and materialB, then we could create a vtkboneMaterialTable like this:

materialTable = vtkbone.vtkboneMaterialTable()

materialTable.AddMaterial (100, materialA)

materialTable.AddMaterial (101, materialB)

materialTable.AddMaterial (102, materialB)

materialTable.AddMaterial (103, materialB)

There are a couple of helper classes that simplify the creation of material tables for common cases. For a model with a homogeneous material, we can use vtkboneGenerateHomogeneousMaterialTable. This class assigns a specified vtkboneMaterial to all the scalar values present in the input mesh. The size of the resulting material table depends on the number of distinct input values. For example,

mtGenerator = vtkbone.vtkboneGenerateHomogeneousMaterialTable()

mtGenerator.SetMaterial (material1)

mtGenerator.SetMaterialIdList (mesh.GetCellData().GetScalars())

mtGenerator.Update()

materialTable = mtGenerator.GetOutput()

We could alternatively call SetMaterialIdList with the scalars from the image, rather than those of the mesh; the results will be the same.
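Conceptually, the helper produces one table entry per distinct scalar value, all pointing at the same material. The sketch below mimics that idea with a plain dictionary. It is not the vtkbone implementation; the function name `homogeneous_table`, the string stand-in for a material object, and the treatment of 0 as background are all assumptions.

```python
def homogeneous_table(scalars, material, background=0):
    """Map every distinct non-background scalar value to the same material."""
    return {value: material
            for value in sorted(set(scalars))
            if value != background}


image_scalars = [0, 0, 127, 127, 0, 127]   # voxel values, including background
mesh_scalars = [127, 127, 127]             # one value per element, no background

table_from_image = homogeneous_table(image_scalars, "material1")
table_from_mesh = homogeneous_table(mesh_scalars, "material1")
print(table_from_image == table_from_mesh)
```

Under these assumptions the two inputs give identical tables, since both contain the same set of distinct non-background values.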

Another useful helper class is vtkboneGenerateHommingaMaterialTable. Refer to the section on the Homminga material model in the chapter on preparing models with n88modelgenerator.

Examples of defining materials and creating a material table are given in the tutorials Compressing a cube revisited using vtkbone, Deflection of a cantilevered beam: adding custom boundary conditions and loads and Advanced custom model tutorial: a screw pull-out test.

3.11. Creating a finite element model

At this stage, we combine the geometric mesh with the material table to create a vtkboneFiniteElementModel object. The most elementary way to do this is with a vtkboneFiniteElementModelGenerator, which can be used like this:

    modelGenerator = vtkbone.vtkboneFiniteElementModelGenerator()
    modelGenerator.SetInput (0, mesh)
    modelGenerator.SetInput (1, materialTable)
    modelGenerator.Update()
    model = modelGenerator.GetOutput()

After combining the mesh with the material table, all that is now required to make a complete finite element model is to add boundary conditions and/or applied loads, and to specify node and element sets for the post-processing. For standard tests, there are vtkbone filters to generate complete models, and these are described below in Filters for creating standard tests.

3.12. Creating node and element sets

Before we can create boundary conditions or applied loads, we need to define node and/or element sets that specify all the nodes (or elements) to which we want to apply the boundary condition (displacement or applied load). Node and element sets are also used during post-processing. This will be discussed later in Setting post processing parameters.

Any number of node and element sets may be defined. They must be named, and they are accessed by name. The node and element sets are simply lists of node or element numbers, and are stored as vtkIdTypeArray. There are many ways to create node and element sets. The simplest is to enumerate the node numbers (or element numbers). For example, if we wanted to create a node set of the nodes 8,9,10,11, this would be the code:

    import vtk
    from numpy import array
    from vtk.util.numpy_support import numpy_to_vtk

    nodes = array ([8,9,10,11])
    nodes_vtk = numpy_to_vtk (nodes, deep=1, array_type=vtk.VTK_ID_TYPE)
    nodes_vtk.SetName ("example_node_set")
    model.AddNodeSet (nodes_vtk)

In Python, the most flexible way to handle array data is with numpy arrays; however, the data must be passed to VTK objects as vtkDataArray. numpy_to_vtk does the conversion.

VTK offers many ways to select Points and Cells, for example by geometric criteria. As an example of this, refer to the tutorial Advanced custom model tutorial: a screw pull-out test, where a node set is selected from nodes located on a particular rough surface, subject to the additional criterion r1 < r < r2, where r is the radius from a screw axis.

vtkbone also offers some utility functions for identifying node and element sets. These can be found in vtkboneSelectionUtilities.
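To make the radial criterion concrete, here is a minimal sketch in plain Python of selecting nodes with r1 < r < r2. It does not use VTK or vtkboneSelectionUtilities. The function name `select_nodes_in_annulus` and the simplification that the axis is parallel to z are assumptions for illustration only.

```python
import math


def select_nodes_in_annulus(points, axis_xy, r1, r2):
    """Return indices of points whose distance r from a z-parallel axis
    satisfies r1 < r < r2.

    points: sequence of (x, y, z) node coordinates.
    axis_xy: (x, y) location of the axis, assumed parallel to z.
    """
    cx, cy = axis_xy
    selected = []
    for index, (x, y, _z) in enumerate(points):
        r = math.hypot(x - cx, y - cy)
        if r1 < r < r2:
            selected.append(index)
    return selected


points = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 2.5, 1.0), (4.0, 0.0, 1.0)]
node_set = select_nodes_in_annulus(points, axis_xy=(0.0, 0.0), r1=1.0, r2=3.0)
print(node_set)
```

The resulting list of indices could then be converted with numpy_to_vtk, named, and added to the model as a node set in the same way as an enumerated list.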

Frequently, we require sets corresponding to the faces of the model (i.e. the boundaries of the image). In this case, we may use the class vtkboneApplyTestBase. vtkboneApplyTestBase is a subclass of vtkboneFiniteElementModelGenerator, with the extra functionality of creating pre-defined sets. It implements the concept of a Test Frame and a Data Frame; refer to test orientation in the chapter on n88modelgenerator. vtkboneApplyTestBase creates the node and element sets given in the following table. Note that in each case there is both a node set and an element set with the same name.

| Set name | Consists of |
|---|---|
| face_z0 | nodes/elements on the bottom surface (z=zmin) in the Test Frame. |
| face_z1 | nodes/elements on the top surface (z=zmax) in the Test Frame. |
| face_x0 | nodes/elements on the x=xmin surface in the Test Frame. |
| face_x1 | nodes/elements on the x=xmax surface in the Test Frame. |
| face_y0 | nodes/elements on the y=ymin surface in the Test Frame. |
| face_y1 | nodes/elements on the y=ymax surface in the Test Frame. |

The sets “face_z0” and “face_z1” are of particular importance, as the standard tests are applied along the z axis in the Test Frame.
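As a rough illustration of what the face sets contain, the sketch below computes the node numbers on the z=zmin and z=zmax faces of a full rectangular block of nodes. This is not how vtkboneApplyTestBase is implemented, and a real mesh generated from a segmented image generally has a different node numbering. The function name `face_node_sets` and the ordering assumption (x varies fastest, then y, then z) are hypothetical.

```python
def face_node_sets(nx, ny, nz):
    """Node IDs on the z=zmin and z=zmax faces of a full nx*ny*nz node grid.

    Assumes x varies fastest, then y, then z, so that
    node_id = i + nx * (j + ny * k).
    """
    def node_id(i, j, k):
        return i + nx * (j + ny * k)

    face_z0 = [node_id(i, j, 0) for j in range(ny) for i in range(nx)]
    face_z1 = [node_id(i, j, nz - 1) for j in range(ny) for i in range(nx)]
    return {"face_z0": face_z0, "face_z1": face_z1}


# A 2x2x2 element cube has 3x3x3 nodes.
sets = face_node_sets(3, 3, 3)
print(sets["face_z0"])
print(sets["face_z1"])
```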

vtkboneApplyTestBase has options UnevenTopSurface() and UnevenBottomSurface() by which we can choose to have “face_z0” and/or “face_z1” as the set of nodes/elements on an uneven surface, instead of the boundary of the model. This is especially useful when the object in the image does not intersect the top/bottom boundaries of the image. See further discussion on uneven surfaces in the chapter on n88modelgenerator. The two sets “face_z0” and “face_z1” can also be limited to a specific material, as defined by material ID.

vtkboneApplyTestBase is used identically to vtkboneFiniteElementModelGenerator, except that additional optional settings may be set if desired. For example, here we define a model with a test axis in the y direction, and specify that we want the set “face_z1” to be the uneven surface in the image, where we are limiting the search for the uneven surface to a maximum distance of 0.5 from the image boundary.

    modelGenerator = vtkbone.vtkboneApplyTestBase()
    modelGenerator.SetInput (0, mesh)
    modelGenerator.SetInput (1, materialTable)
    modelGenerator.SetTestAxis (vtkbone.vtkboneApplyTestBase.TEST_AXIS_Y)
    modelGenerator.UnevenTopSurfaceOn()
    modelGenerator.UseTopSurfaceMaximumDepthOn()
    modelGenerator.SetTopSurfaceMaximumDepth (0.5)
    modelGenerator.Update()
    model = modelGenerator.GetOutput()

An example of using the standard node and element sets from vtkboneApplyTestBase is given in Deflection of a cantilevered beam: adding custom boundary conditions and loads. The tutorial Advanced custom model tutorial: a screw pull-out test demonstrates a sophisticated selection of custom node sets.

3.13. Adding boundary conditions

Once we have created the node sets, creating a boundary condition applied to the nodes of a particular node set is straightforward and can be done with the method ApplyBoundaryCondition() of vtkboneFiniteElementModel. For example, to fix the bottom nodes and apply a displacement of 0.1 to the top nodes, we could do this:

    model.ApplyBoundaryCondition (
        "face_z0",
        vtkbone.vtkboneConstraint.SENSE_Z,  # (1)
        0,
        "bottom_fixed")                     # (2)
    model.ApplyBoundaryCondition (
        "face_z1",
        vtkbone.vtkboneConstraint.SENSE_Z,
        -0.1,                               # (3)
        "top_displacement")

(1) vtkbone.vtkboneConstraint.SENSE_Z has the value 2 (see vtkboneConstraint). It is possible just to use the value 2 here, but SENSE_Z is more informative.

(2) This is the user-defined name assigned to the boundary condition. Every constraint must have a unique name.

(3) Negative if we want compression.

The “sense” is the axis direction along which the displacement is applied. The other axis directions remain free unless you also specify a value for them. It is quite possible, and common, to call ApplyBoundaryCondition() three times on the same node set, once for each sense.

ApplyBoundaryCondition() can take different types of arguments, for example arrays of senses, displacements and node numbers. Refer to the API documentation for vtkboneFiniteElementModel. There is also a convenience method FixNodes(), which is a quick way to set all senses to a zero displacement.
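One way to picture a displacement boundary condition is as three parallel lists: node numbers, senses, and values, with one entry per (node, sense) pair. The sketch below builds such lists in plain Python. It is only an illustration of the bookkeeping, not vtkbone code. The function name `expand_constraint` is hypothetical, and although the values 0 and 2 for the x and z senses are the ones used in this chapter, the value 1 for y is an assumption.

```python
SENSE_X, SENSE_Y, SENSE_Z = 0, 1, 2  # 0 and 2 as in the text; 1 for y is assumed


def expand_constraint(node_ids, senses, value):
    """Build parallel index, sense and value lists, one entry per (node, sense)."""
    indices, sense_list, values = [], [], []
    for node in node_ids:
        for sense in senses:
            indices.append(node)
            sense_list.append(sense)
            values.append(value)
    return indices, sense_list, values


# Fixing two nodes in all three senses, similar in spirit to FixNodes().
fixed = expand_constraint([8, 9], (SENSE_X, SENSE_Y, SENSE_Z), 0.0)

# Displacing the same nodes along z only leaves x and y free.
pushed = expand_constraint([8, 9], (SENSE_Z,), -0.1)
print(fixed)
print(pushed)
```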

Boundary conditions are implemented within vtkboneFiniteElementModel as a specific type of vtkboneConstraint. All the constraints associated with a vtkboneFiniteElementModel are contained in a vtkboneConstraintCollection. It is possible to modify either vtkboneConstraintCollection or a particular vtkboneConstraint manually. For example, we could create a boundary condition on two nodes in the following manner. This is somewhat laborious and usually unnecessary, but it can be adapted to create unusual boundary conditions.

    import vtk
    import vtkbone
    from numpy import array
    from vtk.util.numpy_support import numpy_to_vtk

    # Nodes to constrain, one entry per constrained degree of freedom.
    node_ids = array([3,5])
    node_ids_vtk = numpy_to_vtk (node_ids, deep=1, array_type=vtk.VTK_ID_TYPE)

    senses = array ([0,2]) # x-axis, z-axis
    senses_vtk = numpy_to_vtk (senses, deep=1, array_type=vtk.VTK_ID_TYPE)
    senses_vtk.SetName ("SENSE")

    # Displacements are floating point values, so use a float array type.
    values = array([0.1,0.1])
    values_vtk = numpy_to_vtk (values, deep=1, array_type=vtk.VTK_FLOAT)
    values_vtk.SetName ("VALUE")

    constraint = vtkbone.vtkboneConstraint()
    constraint.SetName ("a_custom_boundary_condition")
    constraint.SetIndices (node_ids_vtk)
    constraint.SetConstraintType (vtkbone.vtkboneConstraint.DISPLACEMENT)
    constraint.SetConstraintAppliedTo (vtkbone.vtkboneConstraint.NODES)
    constraint.GetAttributes().AddArray (senses_vtk)
    constraint.GetAttributes().AddArray (values_vtk)
    model.GetConstraints().AddItem (constraint)

Examples of applying boundary conditions are given in the tutorials Deflection of a cantilevered beam: adding custom boundary conditions and loads and Advanced custom model tutorial: a screw pull-out test.

3.14. Adding applied loads

Creating an applied load is similar to creating a displacement boundary condition. They are both implemented as vtkboneConstraint. However, while displacement boundary conditions are applied to nodes, applied loads are applied to elements; in fact they are applied to a particular face of an element (or to the body of an element). Therefore, the ApplyLoad() method of vtkboneFiniteElementModel takes the name of an element set, and has an additional input argument which specifies to which faces of the elements the load is applied. Here is an example of creating an applied load on the top surface of the model:

    model.ApplyLoad (
        "face_z1",                                       # (1)
        vtkbone.vtkboneConstraint.FACE_Z1_DISTRIBUTION,
        vtkbone.vtkboneConstraint.SENSE_Z,               # (2)
        6900,                                            # (3)
        "top_load")

(1) Refers to the element set “face_z1”, not the corresponding node set, which very often also exists with the same name.

(2) In this example, the applied force is perpendicular to the specified element face, but it is not required to be so.

(3) This is the total load applied. The load is evenly distributed between all of the element faces.

To apply forces along non-axis directions, call ApplyLoad() multiple times with different senses.
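A force along an arbitrary direction can be split into its axis components before making those calls. The following is a minimal sketch in plain Python. The function name `load_components` is hypothetical, and the numbering of senses as 0, 1, 2 for x, y, z is assumed (0 and 2 match the values used for x and z elsewhere in this chapter).

```python
import math


def load_components(magnitude, direction):
    """Split a force of the given magnitude along `direction` into x, y, z parts.

    Returns a list of (sense, component) pairs, skipping zero components;
    sense 0, 1, 2 stands for the x, y, z axis.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    components = [magnitude * c / norm for c in direction]
    return [(sense, value) for sense, value in enumerate(components) if value != 0]


# A total load of 6900 along the direction (3, 0, 4).
parts = load_components(6900, (3, 0, 4))
print(parts)
```

Each returned pair would correspond to one ApplyLoad() call on the same element set, using that sense and that component as the total load, and giving each call its own constraint name.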

As with displacement boundary conditions, we can create an applied force manually, if we require more flexibility. It will look something like this:

    import vtk
    import vtkbone
    from numpy import array, ones
    from vtk.util.numpy_support import numpy_to_vtk

    element_ids = array([3,5])
    element_ids_vtk = numpy_to_vtk (element_ids, deep=1, array_type=vtk.VTK_ID_TYPE)

    # Apply the load to the z1 face of each listed element.
    distributions = ones ((2,), int) * vtkbone.vtkboneConstraint.FACE_Z1_DISTRIBUTION
    distributions_vtk = numpy_to_vtk (distributions, deep=1, array_type=vtk.VTK_ID_TYPE)
    distributions_vtk.SetName ("DISTRIBUTION")

    senses = array ([0,2]) # x-axis, z-axis
    senses_vtk = numpy_to_vtk (senses, deep=1, array_type=vtk.VTK_ID_TYPE)
    senses_vtk.SetName ("SENSE")

    # Split the total load evenly between the two element faces.
    total_load = 6900
    values = total_load * ones(2, float) / 2
    values_vtk = numpy_to_vtk (values, deep=1, array_type=vtk.VTK_FLOAT)
    values_vtk.SetName ("VALUE")

    constraint = vtkbone.vtkboneConstraint()
    constraint.SetName ("a_custom_applied_load")
    constraint.SetIndices (element_ids_vtk)
    constraint.SetConstraintType (vtkbone.vtkboneConstraint.FORCE)
    constraint.SetConstraintAppliedTo (vtkbone.vtkboneConstraint.ELEMENTS)
    constraint.GetAttributes().AddArray (distributions_vtk)
    constraint.GetAttributes().AddArray (senses_vtk)
    constraint.GetAttributes().AddArray (values_vtk)
    model.GetConstraints().AddItem (constraint)

|

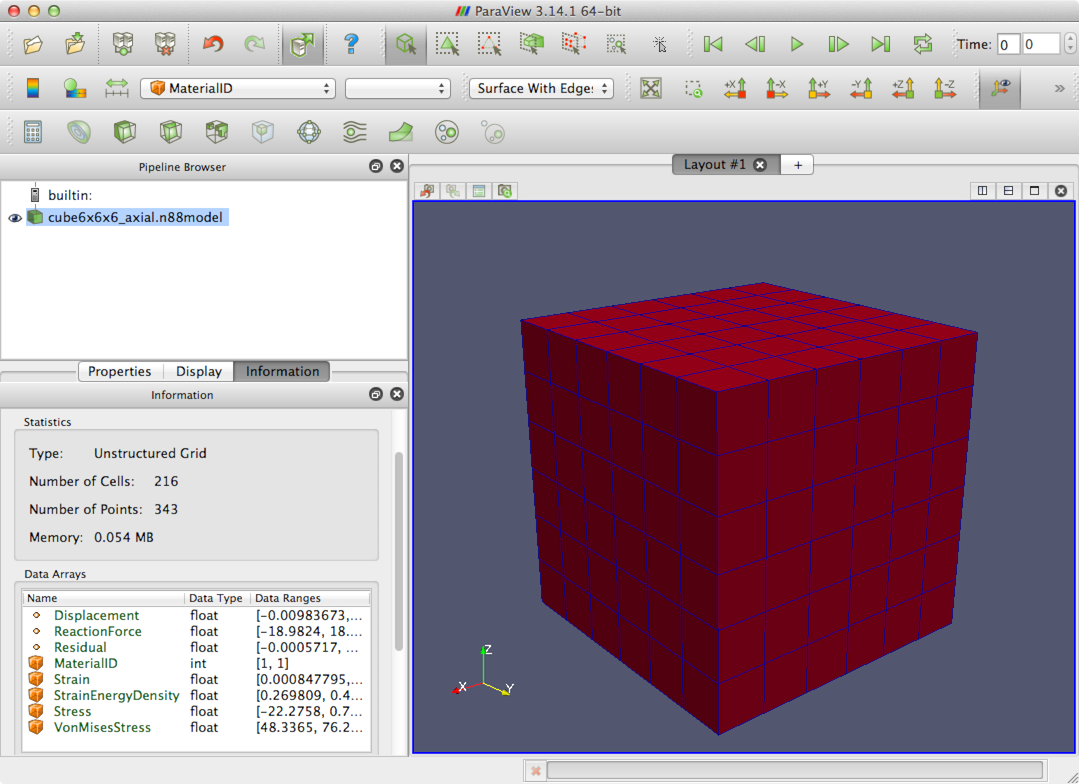

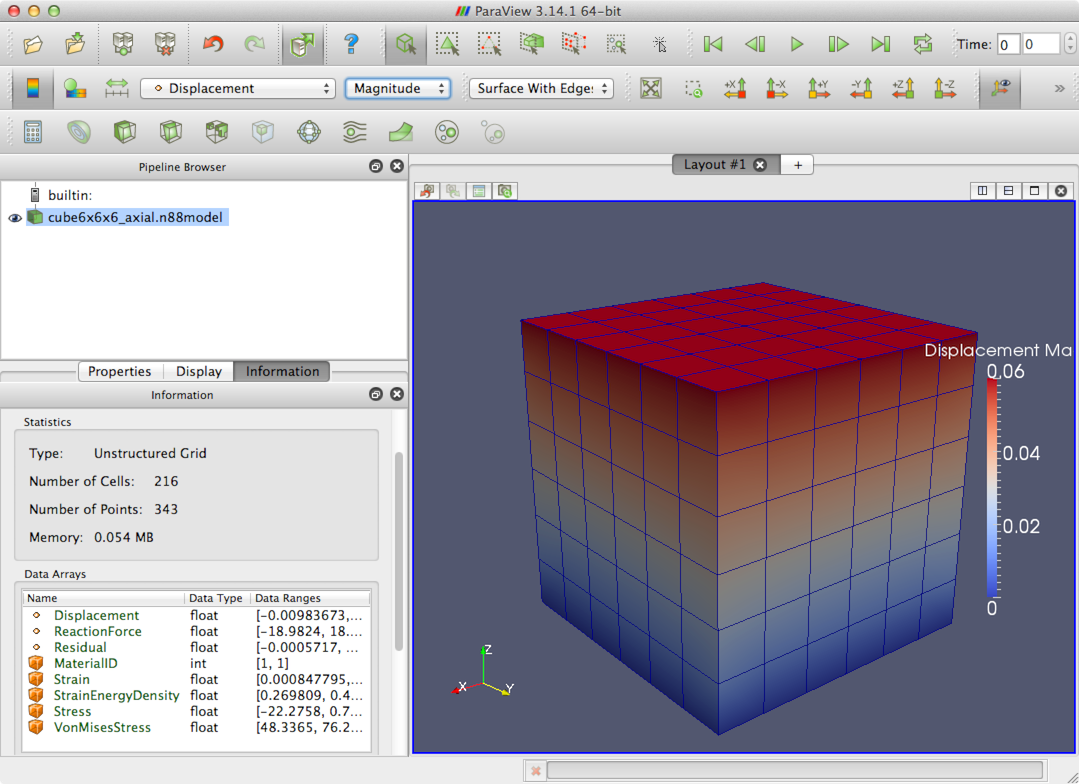

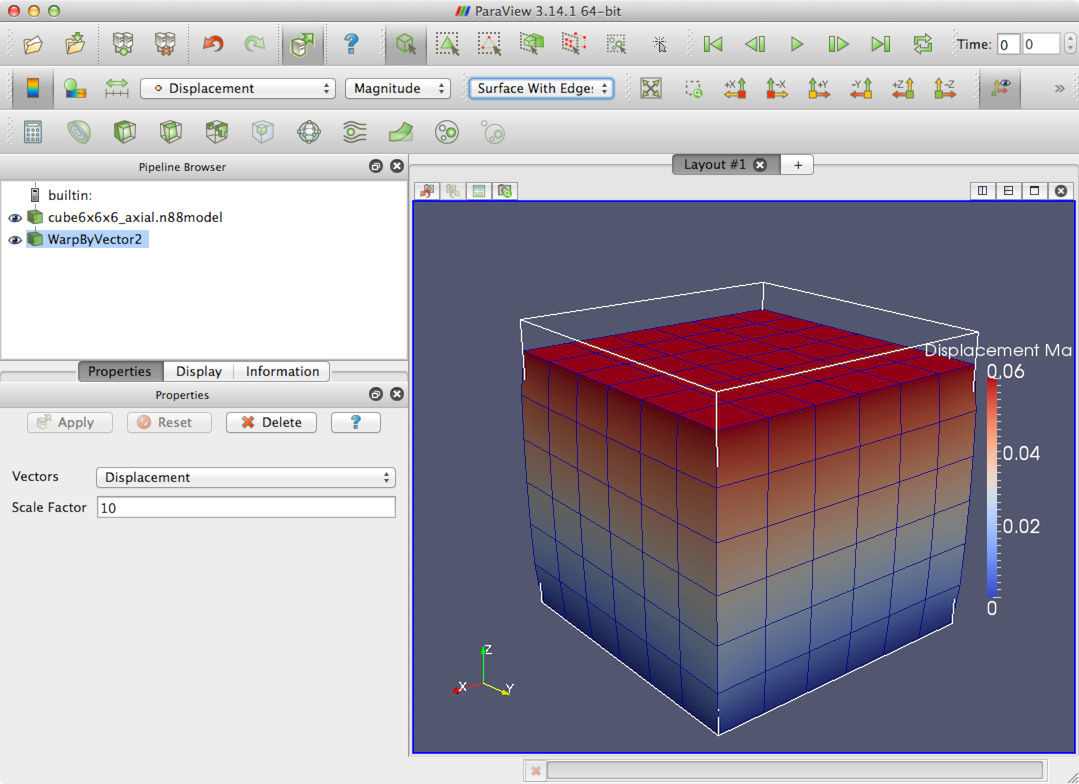

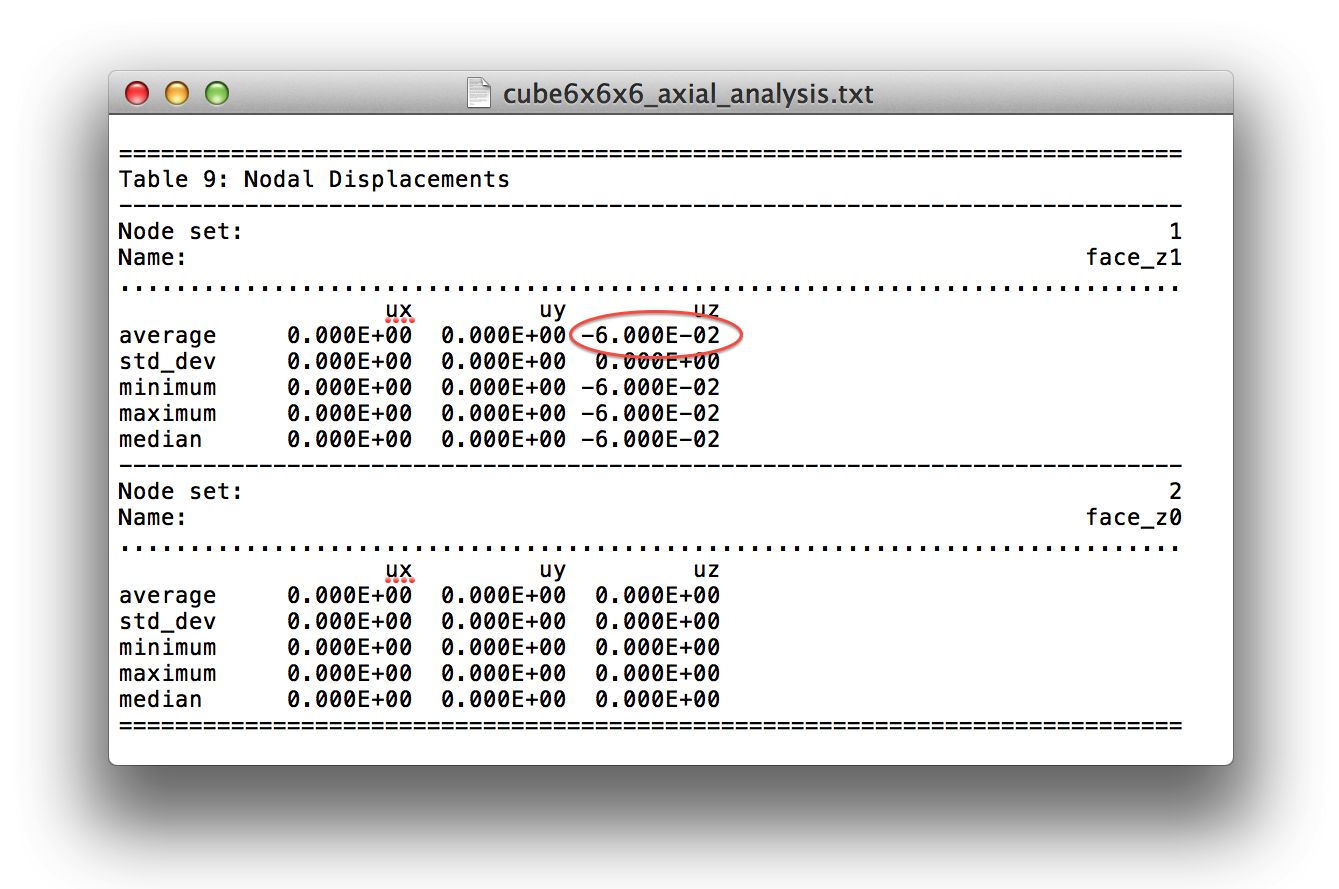

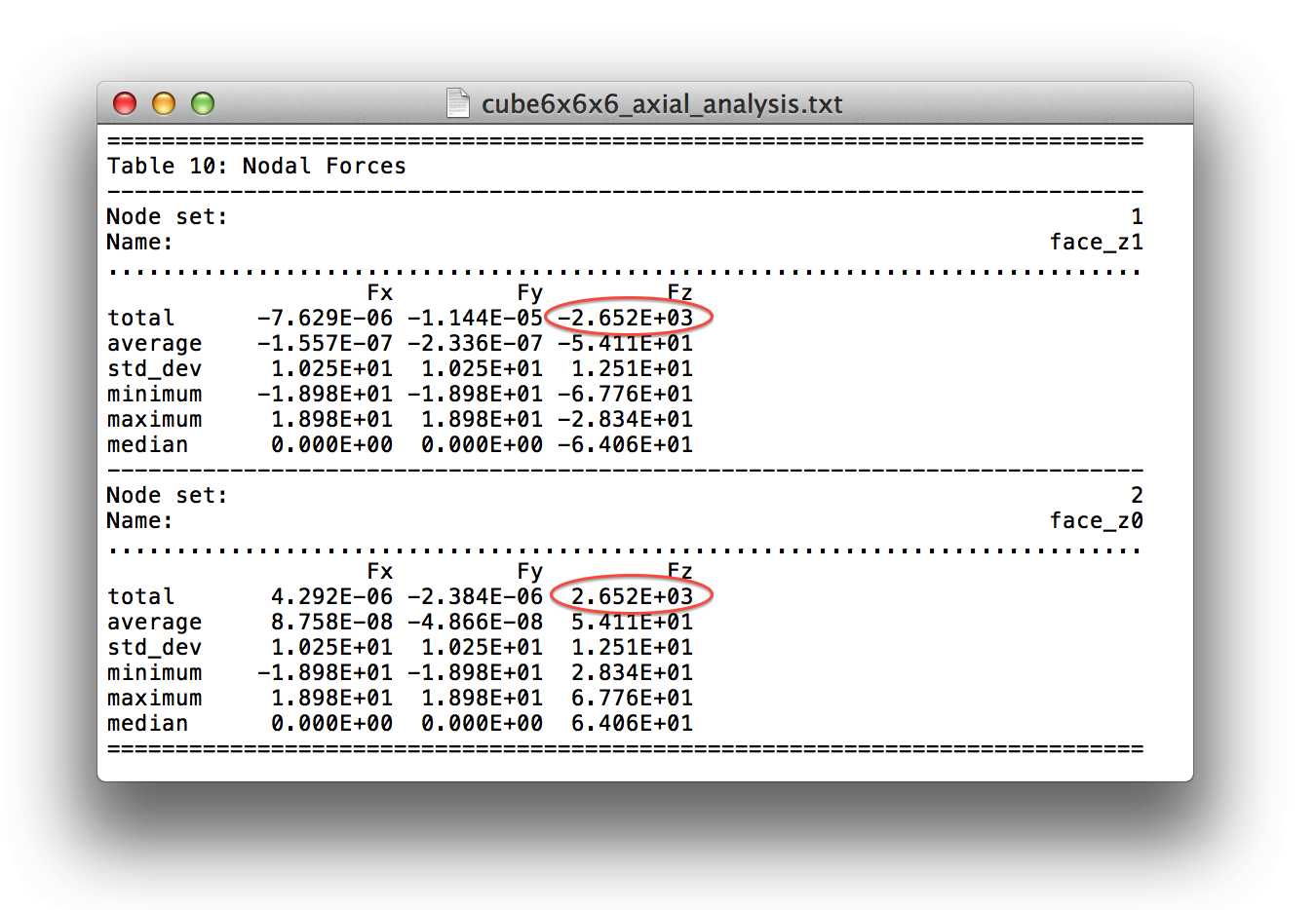

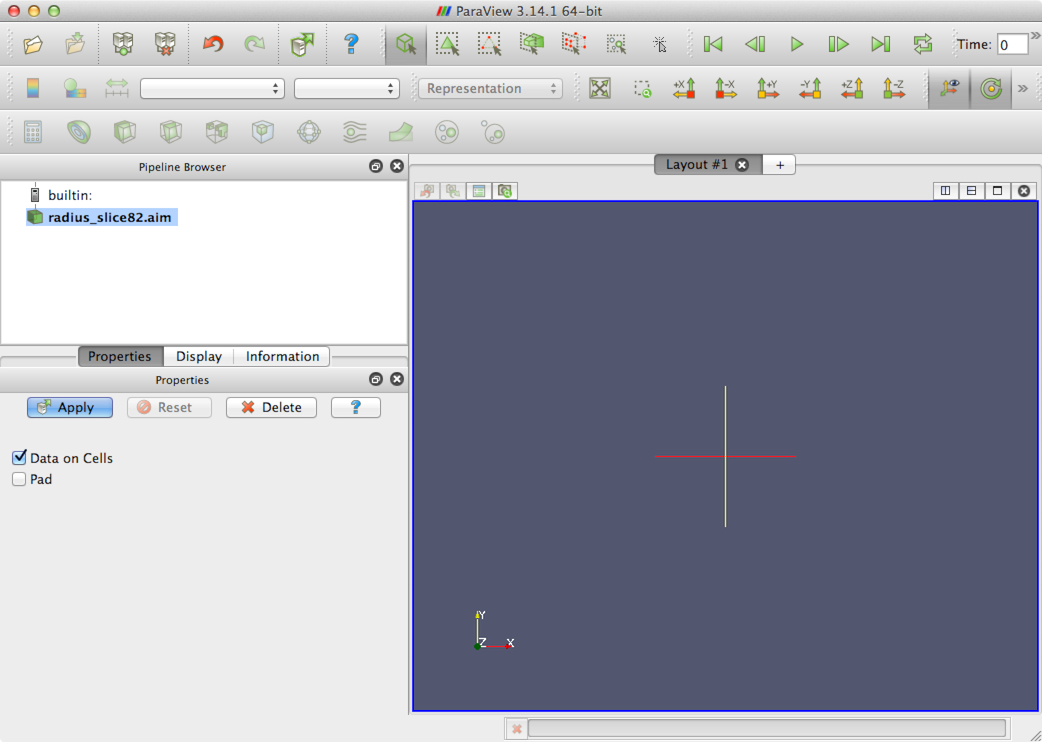

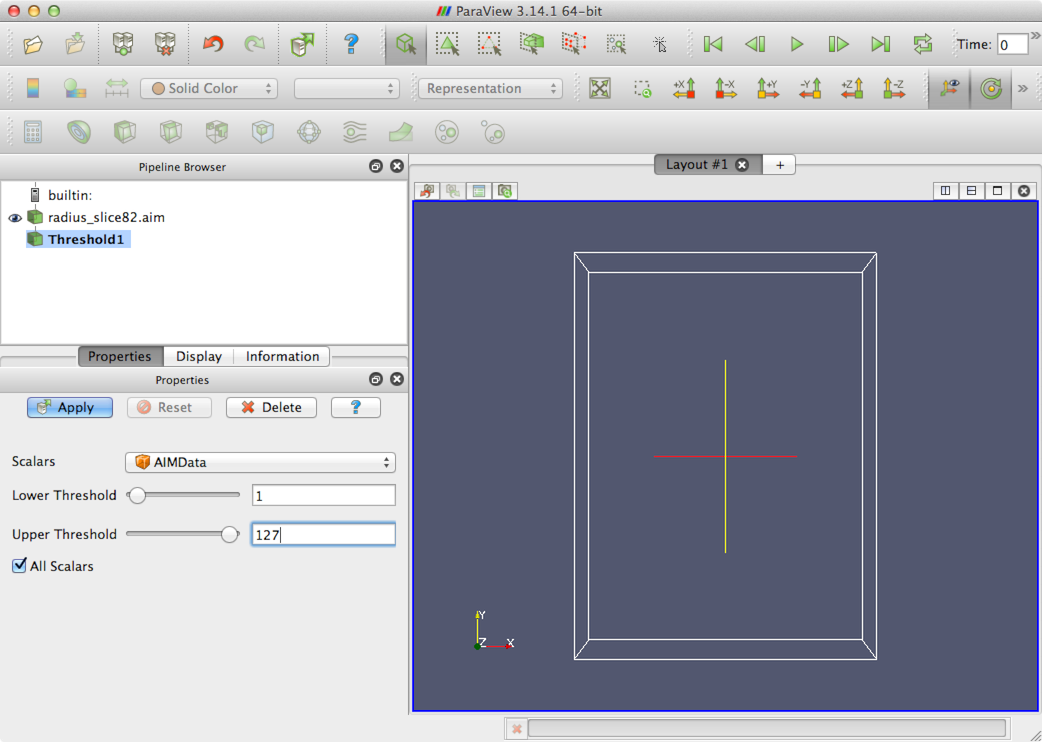

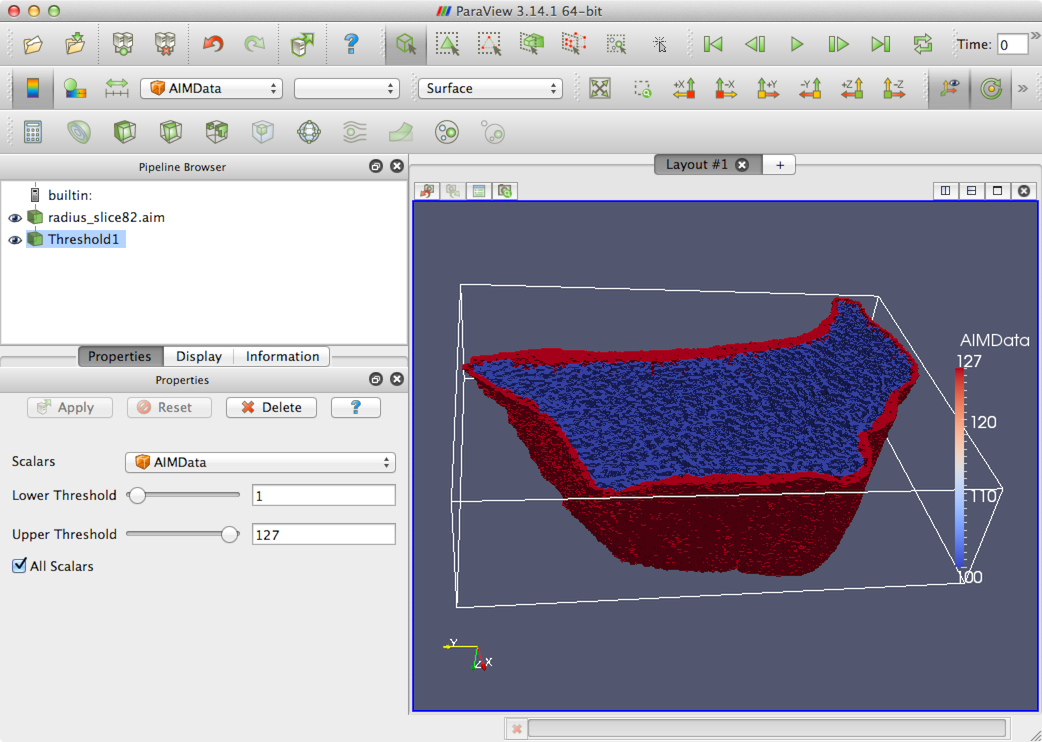

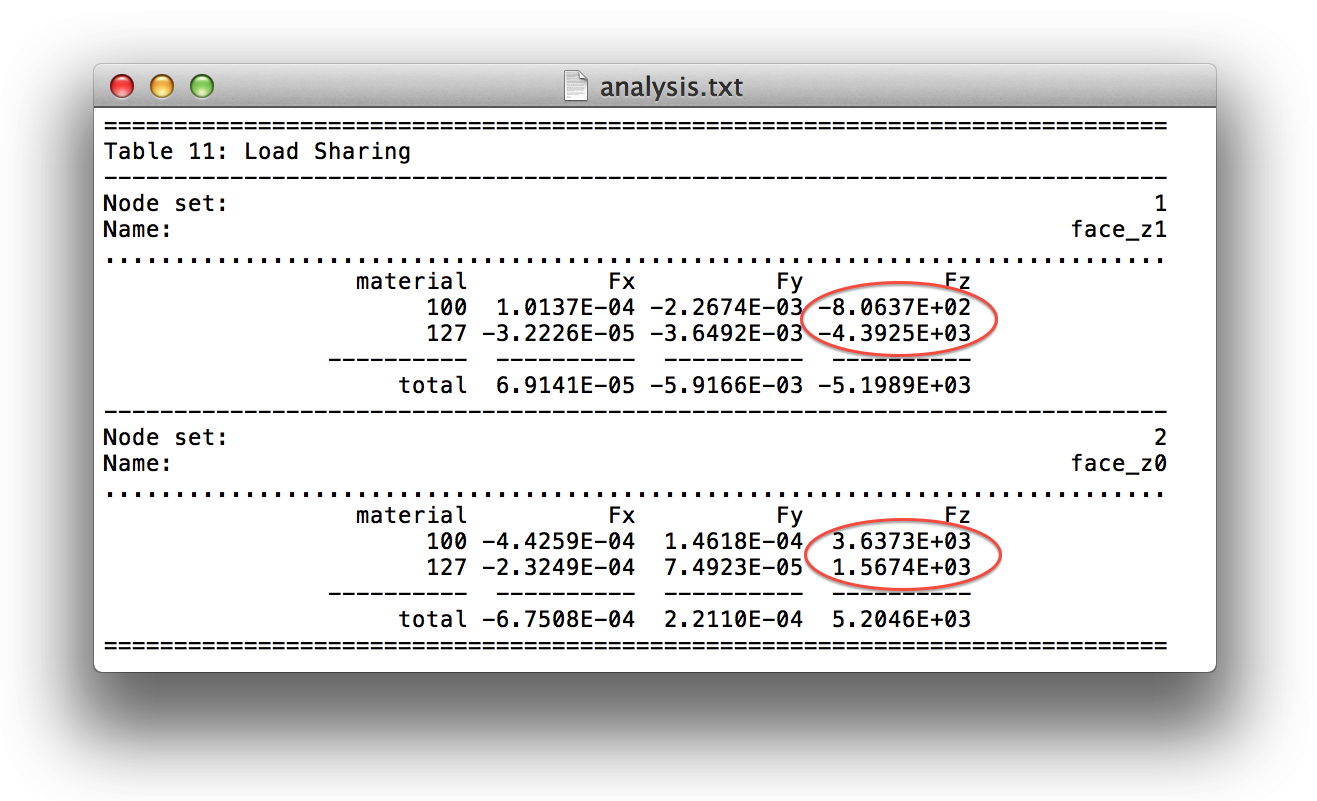

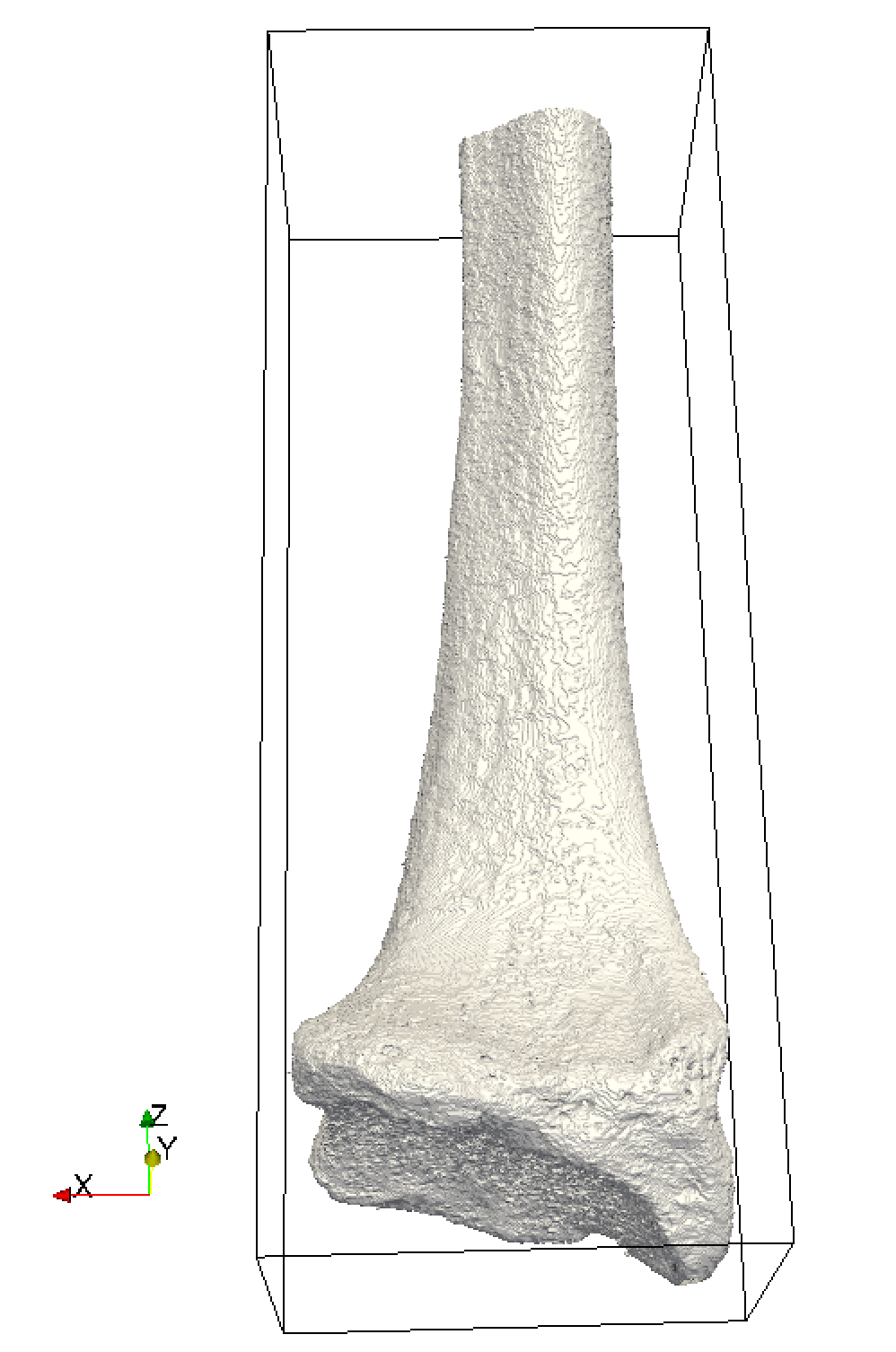

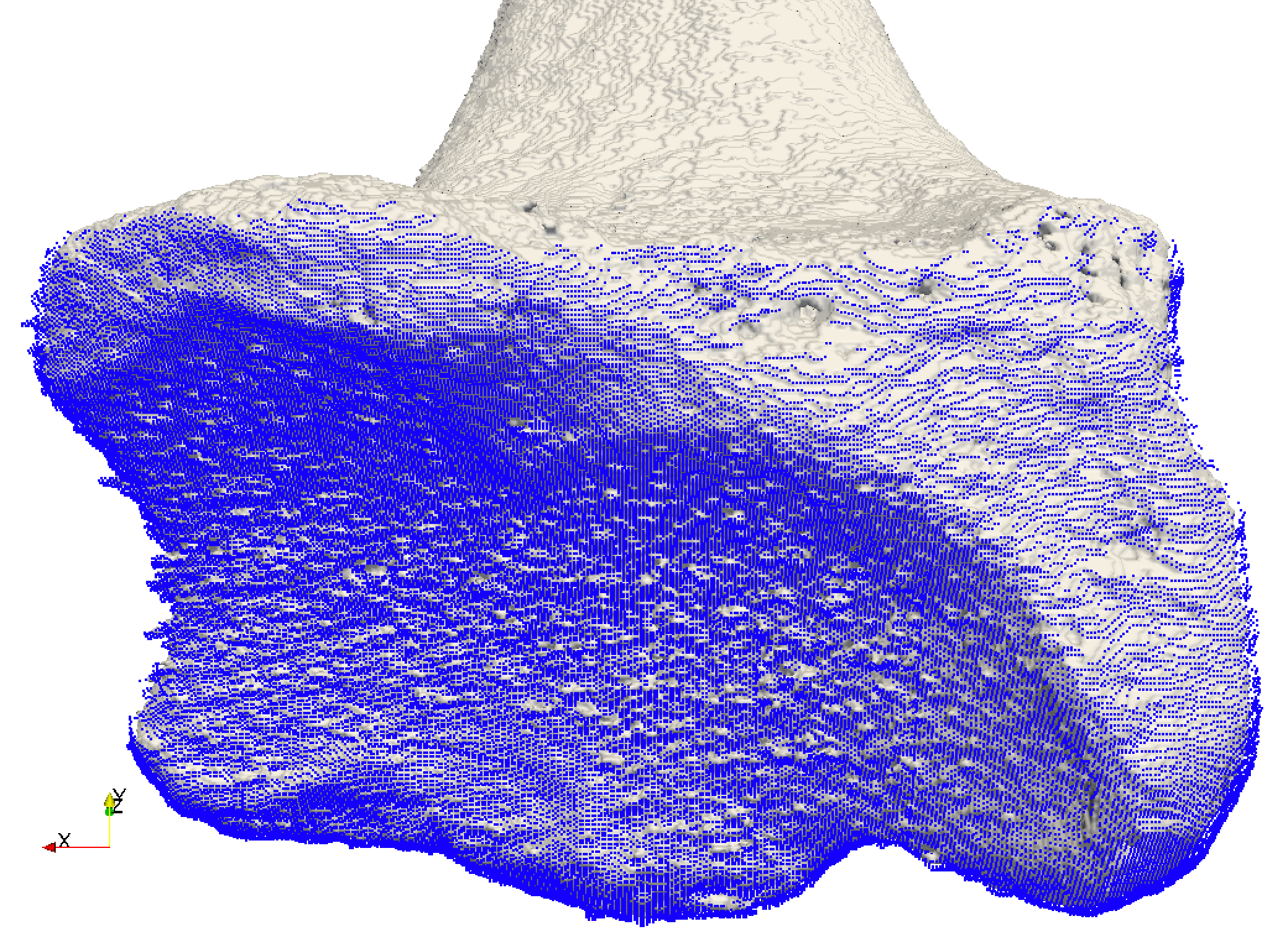

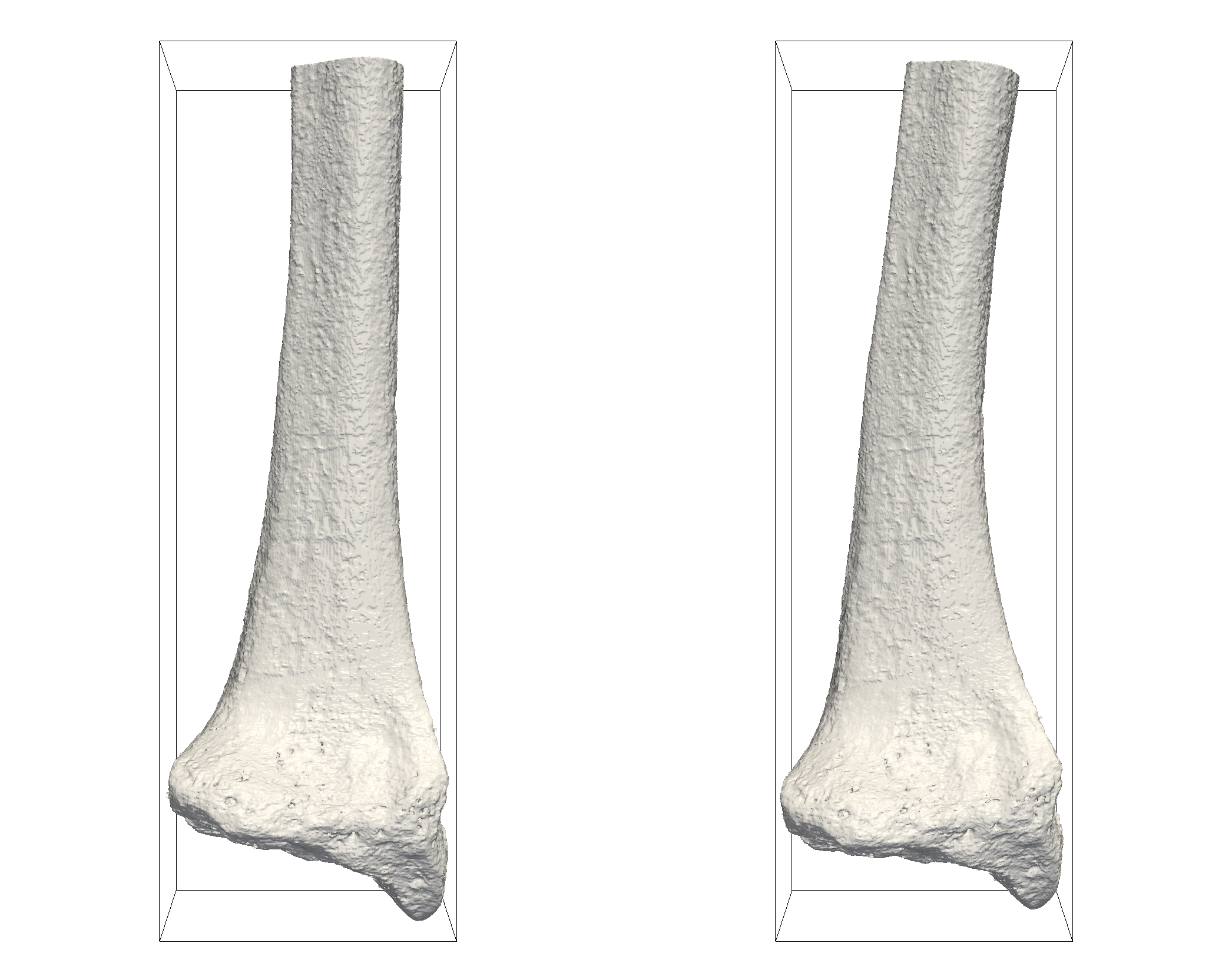

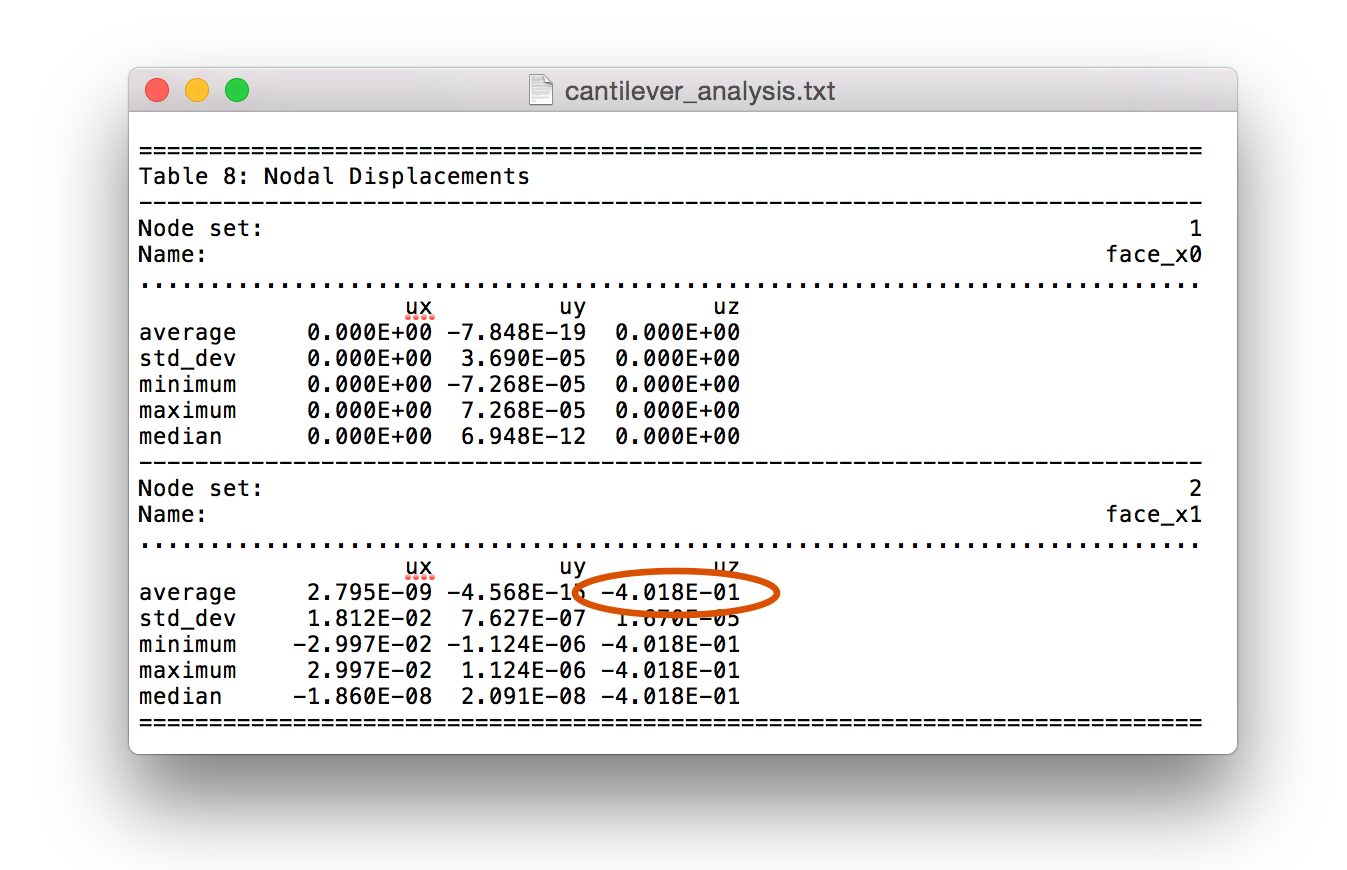

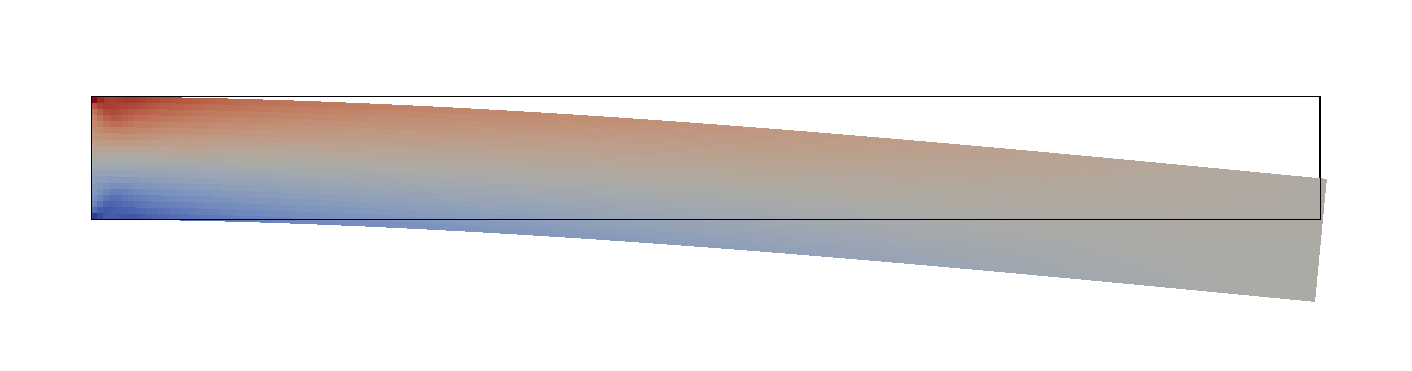

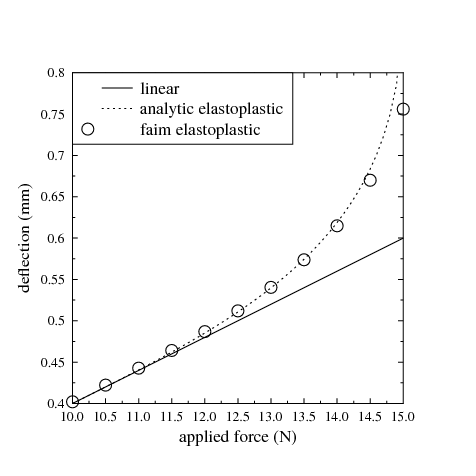

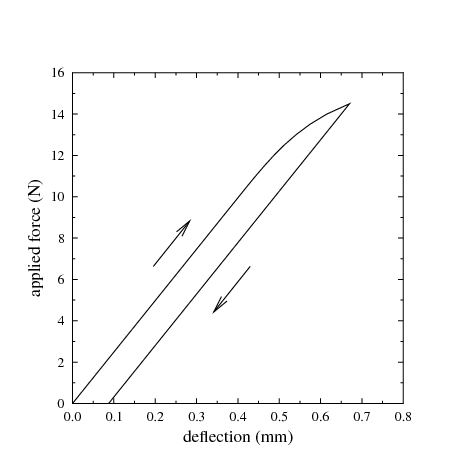

|